The Journey of Corpus

April 29th, 2021 by Ana Pereira

How we established a team with a clear focus on data governance (and exactly what that means), allowing us to leverage the vast data available to SoundCloud, the world’s largest open audio platform, to become a more data-driven company.

Testing SQL for BigQuery

October 16th, 2020 by Barbara Scherlein

“To me, legacy code is simply code without tests.” — Michael Feathers

If untested code is legacy code, why aren’t we testing data pipelines or ETLs (extract, transform, load)? In particular, data pipelines built in SQL are rarely tested. However, as software engineers, we know all our code should be tested. So in this post, I’ll describe how we started testing SQL data pipelines at SoundCloud.

How (Not) to Build Datasets and Consume Data at Your Company

March 3rd, 2020 by George Roldugin

Two years ago, if an engineering team at SoundCloud needed data produced by another team, in many cases that team would have had to start by building a dataset from the raw data. That meant there was no standard way to structure data into a dataset, there were errors in understanding and interpreting raw data, and there was no reuse of datasets across teams. Ownership of datasets was with the teams consuming data, even when the data itself was owned and best understood by someone else. By agreeing on an ownership strategy, a common shape for datasets, and standard tooling, SoundCloud was able to eliminate these problems. Now the data and datasets are provided by those who know their data best, with the help of tooling built by those who know best how to build tools. Read on to learn what datasets look like at SoundCloud and how they are built.

Keeping Counts In Sync

May 11th, 2018 by Lorand Kasler

Track play counts are essential for providing a good creator experience on the SoundCloud platform. They not only help creators keep track of their most popular songs, but they also give creators a better understanding of their fanbase and global impact. This post is a continuation of an earlier post that discussed what we do at SoundCloud to ensure creators get their play stats (along with their other stats), both reliably and in real time.

PageRank in Spark

January 24th, 2018 by Josh Devins

SoundCloud consists of hundreds of millions of tracks, people, albums, and playlists, and navigating this vast collection of music and personalities poses a large challenge, particularly with so many covers, remixes, and original works all in one place.

SoundCloud's Data Science Process

October 4th, 2017 by Josh Devins

Here at SoundCloud, we’ve been working on helping our Data Scientists be more effective, happy, and productive. We revamped our organizational structure, clearly defined the role of a Data Scientist and a Data Engineer, introduced working groups to solve common problems (like this), and positioned ourselves to do incredible work! Most recently, we started thinking about the work that a Data Scientist does, and how best to describe and share the process that we use to work on a business problem. Based on the experiences of our Data Scientists, we distilled a set of steps, tips, and general guidance representing the best practices that we collectively know of and agree to as a community of practitioners.

A Better Model of Data Ownership

June 20th, 2017 by Joe Kearney

Once upon a time, we had a single monolith of software, one mothership running everything. At SoundCloud, the proliferation of microservices came from moving functionality out of the mothership. There are plenty of benefits to splitting up features in this way. We want the same benefits for our data as well, by defining ownership of datasets and ensuring that the right teams own the right datasets.

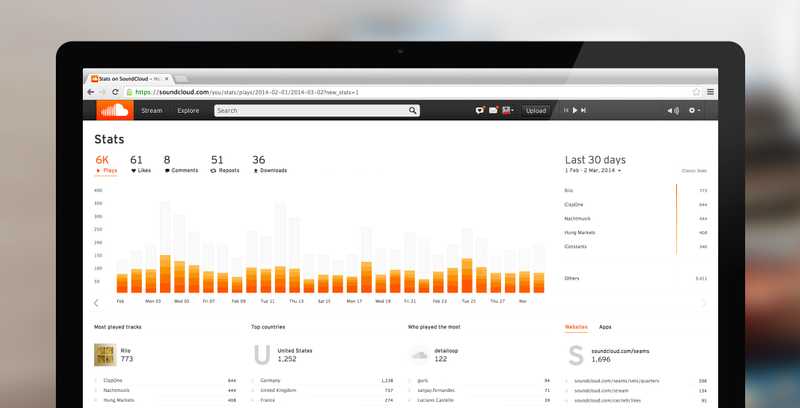

Real-Time Counts with Stitch

July 3rd, 2014 by Emily Green

Here at SoundCloud, in order to provide counts and a time series of counts in real time, we created something called Stitch.

Stitch was initially developed to provide timelines and counts for our stats pages, which are where users can see which of their tracks are played and when.

Stitch is a wrapper around a Cassandra database. It has a web application that provides read access to the counts through an HTTP API. The counts are written to Cassandra in two distinct ways, and it’s possible to use…

MySQL for Statistics – Old Faithful

July 5th, 2011 by Sean Treadway

MySQL turns out to be a good Swiss Army Knife for persistence, if used wisely. Understanding the disk access patterns driven by your storage engine is key. Choosing a read-optimized or write-optimized disk layout will get you very far. We chose a read-optimized disk layout using InnoDB and MySQL for statistics.

While our wheels were spinning trying to find out why our statistics storage patterns were causing MongoDB to thrash our disks, we started looking for an emergency alternative with the technology that…