SoundCloud's Data Science Process

Here at SoundCloud, we’ve been working on helping our Data Scientists be more effective, happy, and productive. We revamped our organizational structure, clearly defined the role of a Data Scientist and a Data Engineer, introduced working groups to solve common problems (like this), and positioned ourselves to do incredible work! Most recently, we started thinking about the work that a Data Scientist does, and how best to describe and share the process that we use to work on a business problem. Based on the experiences of our Data Scientists, we distilled a set of steps, tips and general guidance representing the best practices that we collectively know of and agree to as a community of practitioners.

The process represents an informal agreement amongst the team on how we accomplish data science objectives, and it serves as a basis for reflecting on how to improve ourselves and our work. When we work on tasks, we use the process to bring higher levels of quality to our work and to improve our ability to communicate with our peers on progress, successes, and failures. We iterate on and adapt the process itself whenever we see systematic failures appearing in our work or find ways to improve it.

One thing worth highlighting is the iterative nature of our work here at SoundCloud. We work daily with Product Managers, Designers, and Engineers and we do so in a highly collaborative and iterative way. As such, we have designed our process to not only allow for iterative work, but to embrace it as a fundamental principle. We aim to deliver high-value work on a regular basis to our stakeholders and to adapt to a rapidly changing environment and available information. Think of it as a Bayesian process — we’re constantly updating our prior!

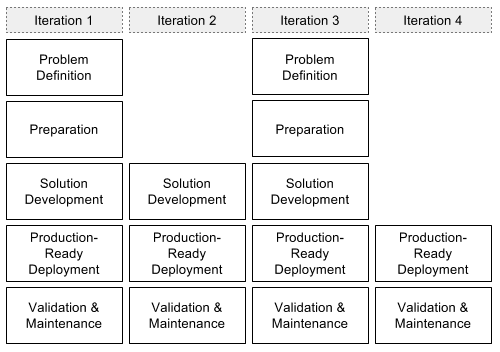

When you read the steps below, keep in mind that they are logical steps of the work process and that the actual workflow contains loops and iterations: the outcome of one step might require revisiting previous steps. In most cases, we expect first iterations to be as small in scope as possible, and we consider completing every step more important than adding complexity to any one of them, so that we can deliver incremental value earlier. As such, following the process in practice should end up looking like the diagram above, as opposed to a single pass through each step once and only once.

Speaking of iterative work, this is our first iteration on capturing our data science process. We’re happy with and proud of what we have collected here, and we’re looking forward to figuring out the next steps. We want to see where the process does not fit and what changes we should make to it. There might be practices that we should add or some that we should remove. We’d also like to introduce a set of values and principles that we can use to guide our decision-making as Data Scientists.

We look forward to learning, adapting and iterating as we gain more experience as a group. Below you will find a condensed version of our Data Science process and we’d love to know what you think about it. How do you work on a Data Science task or problem? Have we forgotten any stages or important attributes of each stage? Is this something you’d like to adopt for your work? Let us know what you think, and join us in the conversation!

Data Science Process

Authors: Özgür Demir, Josh Devins, Max Jakob, Janette Lehmann, Christoph Sawade, Warren Winter

Problem Definition

At the outset, we collaboratively define the objective, ensuring that all stakeholders are on the same page about what problem we’re trying to solve. Alongside this, we define the metrics that measure success, so that we agree on when we are successful. This is similar to the definition of done in agile engineering practices.

- Understand the business need (in collaboration with stakeholders)

- Challenge and validate assumptions about the business needs

- Identify and prioritize subproblems, including metrics

- Narrow the scope to the minimum needed to achieve the business goal

Preparation

Before diving into a potential solution, we take some time to first prepare ourselves and the data. We build some intuition and knowledge of the problem domain, with the support of domain experts and research. We spend some time exploring our data and finding the relevant variables or attributes of the datasets available to us. Finally, we define our solution space by narrowing all possible solutions down to a general area. An example would be determining whether supervised binary classification is a suitable approach, or whether we need a multi-label or multi-class classifier.

- Collect all available data, understand if it is sufficient and representative

- Exploratory data analysis, including any manual labeling

- Get peer feedback on the solution space and any conclusions drawn
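As an illustration of narrowing the solution space during exploratory analysis, here is a minimal Python sketch that inspects manually labeled examples to suggest whether the problem looks binary, multi-class, or multi-label, and how balanced the labels are. The function names and the input format (one set of labels per example) are assumptions made for this example, and the sample data is made up.

```python
from collections import Counter


def classification_type(label_sets):
    """Guess the problem shape from labeled examples.

    label_sets: one set of labels per example (hypothetical input format).
    """
    # Any example carrying more than one label implies a multi-label problem.
    if any(len(labels) > 1 for labels in label_sets):
        return "multi-label"
    distinct = set().union(*label_sets)
    return "binary" if len(distinct) <= 2 else "multi-class"


def label_shares(label_sets):
    """Return the share of each label, as a rough check on class balance."""
    counts = Counter(label for labels in label_sets for label in labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


# Made-up labels, for illustration only.
sample = [{"spam"}, {"ok"}, {"ok"}, {"ok"}]
print(classification_type(sample))
print(label_shares(sample))
```

A heavily skewed label distribution is one signal that the collected data may not be representative, or that accuracy alone will be a misleading metric later on.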

Solution Development

Solution development is where we really dig into solving the problem at hand. We create a ranked list of candidate approaches for solving the problem, and after obtaining a simple baseline, we implement one or more prototype(s). As we go, we document the decision-making process (e.g. in a journal of decisions). This serves as a kind of storyline that supports our chosen approaches and solutions and records problems or edge cases that we encountered, quirks with the data or a library, and so forth.

- Review prior art, literature, existing implementations, etc.

- Establish a baseline, through simple means

- Consider trade-offs, such as those between goodness of fit, ease of use, complexity, maintenance, community, etc.

- Prototype solution(s), evaluating the models (e.g. accuracy, precision/recall) and operational performance (e.g. scalability, runtime performance)

- Challenge and validate assumptions that the solution solves the business problem

- Get peer feedback on solutions, implementation ideas, and evaluation procedures
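To make "establish a baseline, through simple means" concrete, the sketch below compares a majority-class baseline against a prototype using accuracy and precision/recall. It is only one possible setup: the toy data, the single-feature threshold "prototype", and all function names are assumptions for illustration, not a description of any model we run.

```python
from collections import Counter


def majority_baseline(train_labels):
    """Return a predictor that always outputs the most common training label."""
    most_common = Counter(train_labels).most_common(1)[0][0]
    return lambda _features: most_common


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    # Define the metric as 0.0 when its denominator is empty.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Made-up data, for illustration only.
train_labels = [0, 0, 0, 1, 0, 1]
test_features = [0.1, 0.9, 0.4, 0.8, 0.2]
test_labels = [0, 1, 0, 1, 0]

baseline = majority_baseline(train_labels)


def prototype(x):
    """Hypothetical prototype: a fixed threshold on a single feature."""
    return 1 if x > 0.5 else 0


baseline_pred = [baseline(x) for x in test_features]
prototype_pred = [prototype(x) for x in test_features]

for name, pred in [("baseline", baseline_pred), ("prototype", prototype_pred)]:
    print(name, accuracy(test_labels, pred), precision_recall(test_labels, pred))
```

On this toy data the baseline reaches 60% accuracy while never finding a positive example, which is why precision and recall are worth reporting next to accuracy before judging a prototype.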

Production-Ready Deployment

Once a solution has been validated through offline evaluation (where possible), we prepare and deploy a production-grade implementation. Where possible, this will be deployed as an A/B test first, or launched in “dark mode” on a subset of traffic in order to observe the effects and provide further validation of the solution.

- Understand how the solution fits into existing infrastructure

- Ensure quality standards are met for runtime performance (e.g. with SLAs/SLOs), automation, testing, documentation, code reviews, etc.

- Document how the approach has been validated in production

- Monitor quality (semantic and operational)

- Communicate results to stakeholders and broader audience (e.g. with a demo)
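One common way to expose a solution to only a subset of traffic, whether for an A/B test or a "dark mode" launch, is deterministic hash-based bucketing. The sketch below is an assumption about how this could be done, not a description of our infrastructure; `bucket`, `assign_variant`, and the experiment name are hypothetical.

```python
import hashlib


def bucket(user_id: str, experiment: str, buckets: int = 100) -> int:
    """Map a user to a stable bucket in [0, buckets) for a given experiment."""
    # Salting with the experiment name keeps assignments independent
    # across experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    return int(hashlib.sha256(key).hexdigest(), 16) % buckets


def assign_variant(user_id: str, experiment: str, treatment_percent: int) -> str:
    """Put roughly `treatment_percent` percent of users into the treatment group."""
    if bucket(user_id, experiment) < treatment_percent:
        return "treatment"
    return "control"


# The same user always lands in the same group for the same experiment.
print(assign_variant("user-42", "new-ranking-model", treatment_percent=10))
```

Because the assignment depends only on the user and experiment identifiers, it needs no stored state and can be reproduced later when analyzing results.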

Validation and Maintenance

Once a solution is in production, we need to keep an eye on things and evaluate the metrics that we started with. We analyze A/B test results and regularly monitor the solution to ensure that over time, it keeps on meeting our expectations and solving our stated business problem.

- Monitor performance

- Correlate A/B test results with offline evaluation

- Re-train models regularly (understand freshness requirements)

- Cycle back to the problem statement (e.g. the problem is solved or partially solved, the problem statement needs reformulation)
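To illustrate what correlating A/B test results with offline evaluation can look like, here is a minimal sketch that computes a Spearman rank correlation between an offline metric and the online lift observed across several past experiments. Spearman correlation is one reasonable choice among several, and the numbers below are made up purely for illustration.

```python
import math


def ranks(values):
    """Return 1-based ranks, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def pearson(xs, ys):
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    norm_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    norm_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if norm_x == 0 or norm_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (norm_x * norm_y)


def spearman(xs, ys):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


# Made-up numbers: one entry per past experiment.
offline_metric = [0.71, 0.74, 0.78, 0.80]   # e.g. an offline evaluation score
online_lift = [0.2, 0.5, 0.4, 0.9]          # e.g. lift measured in the A/B test

print(round(spearman(offline_metric, online_lift), 3))
```

A consistently high correlation suggests that the offline evaluation is a useful proxy for online behavior; a low one is a reason to cycle back and revisit the metrics chosen during problem definition.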