Tiny Letter from Kafka

This article discusses the powerful design choice of Apache Kafka, “an open-source distributed event streaming platform,” and gives a sneak peek at how SoundCloud teams consume event data from it.

The Story of the Name

Why is Apache Kafka named after the author Franz Kafka? Frankly, this was my first thought when I began learning about Apache Kafka. After researching, I found out there’s a little story behind the name.

In a LinkedIn post from 22 November 2019, Langfei He shared the following story, which has been told and retold by many people over the years.

Franz Kafka met a young girl crying in a Berlin park after she lost her favorite doll. Kafka tried to find the doll for her, but he wasn’t successful. He asked her to meet the next day to continue looking, but when they still hadn’t found the doll, Kafka gave the girl a letter he claimed was from the doll.

It read: “Please do not cry. I have gone on a trip to see the world. I’m going to write to you about my adventures.”

And so, whenever Kafka would come back from his travels, he’d meet with the girl and read her the letters. After a few years of this, he came back with a new doll and gave it to the girl.

Upon receiving this doll, the girl said, “This doll does not look like my doll at all.”

But Kafka gave the girl a tiny letter, saying it was from the doll. The letter said, “My trips have changed me.” She was convinced and took the doll with her.

The following year, Kafka died.

Many years later, the girl found a letter inside a hidden crevice in the doll. The tiny letter said, “Everything you love is very likely to be lost, but in the end, love will return in a different way.”

I found this story amusing, not just because of how sentimental it is, but also because of how it can be used to explain the core flow of Apache Kafka.

How is that exactly?

If you want to understand why Apache Kafka is a brilliant choice for a distributed messaging system and why it deserves more discussion, keep reading.

Publish–Subscribe Messaging Pattern

To understand Apache Kafka, you first must understand the publish–subscribe messaging pattern.

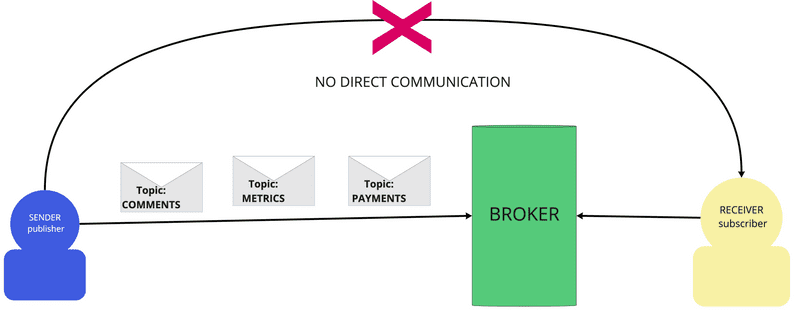

The publish–subscribe messaging pattern has a publisher and a subscriber — the publisher is a sender, and the subscriber is a receiver. The publisher doesn’t send messages directly to the subscribers; instead, it classifies each message under a certain topic without knowing if there are any subscribers to its messages. The same goes for receivers: They request a certain type of message without knowing if there are publishers.

The publish–subscribe system usually has a broker where all messages are published.

Image 1-1 illustrates how Kafka uses the publish–subscribe pattern. Senders don’t send messages directly to receivers. Instead, senders publish messages to the broker, which works like a bulletin board. Subscribers who are interested in a certain topic pull the messages from the broker. Imagine a library: Authors don’t deliver their books to readers directly. Instead, readers check out the books they’re interested in.
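To make the pattern concrete, here is a minimal sketch in Python. It is a toy in-memory model, not Kafka's client API: the `Broker` class and its `publish` and `pull` methods are names invented for this illustration.

```python
from collections import defaultdict


class Broker:
    """Toy in-memory broker: keeps messages per topic, like a bulletin board."""

    def __init__(self):
        self._topics = defaultdict(list)

    def publish(self, topic, message):
        # The publisher only names a topic; it never addresses a subscriber.
        self._topics[topic].append(message)

    def pull(self, topic, start=0):
        # The subscriber only names a topic; it never addresses a publisher.
        # Reading does not remove anything from the broker.
        return list(self._topics.get(topic, [])[start:])


broker = Broker()
broker.publish("new_track_release", "Billie Eilish: Not My Responsibility")
broker.publish("user_created", "user#1")

# Two independent subscribers pull the same topic and see the same messages.
reader_a = broker.pull("new_track_release")
reader_b = broker.pull("new_track_release")
print(reader_a)
```

Neither side holds a reference to the other, which is the decoupling the pattern is about.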

Topic? Log? Kafka as a Database?

In Kafka, a topic is a category name that represents a stream of “related” events. For example, we can set topics like user_created, password_changed, new_track_release, user_email_confirmed, etc., and consumer applications can collect the messages from each topic to do useful things that fit our business needs.

Let’s say there’s a topic called new_track_release. Under this topic, the following events can be saved as distributed logs: “Billie Eilish’s track ‘Not My Responsibility’ was released on 26.05.2020”; “Sébastien Tellier’s track ‘Stuck in a Summer Love’ was released on 29.05.2020”; “Kehlani’s track ‘Can I’ was released on 30.07.2020.”

Each event has a corresponding timestamp, and the latest record is always appended to the end of the log. Once a record is written, both its position in the log and its contents are immutable.

There’s a saying from the Dalai Lama: “Time passes unhindered. When we make mistakes, we cannot turn the clock back and try again.” The same rule applies to Apache Kafka: Nobody can turn back time or manipulate what has happened.
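The append-only idea can be sketched in a few lines of Python. This is only an illustration of the concept, not how Kafka stores data internally; `Record` and `AppendOnlyLog` are hypothetical names, and the two events are taken from the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: a record cannot be modified once created
class Record:
    offset: int
    timestamp: str
    value: str


class AppendOnlyLog:
    def __init__(self):
        self._records = []

    def append(self, timestamp, value):
        # New records always go to the end; the offset is simply the position.
        record = Record(len(self._records), timestamp, value)
        self._records.append(record)
        return record.offset

    def read(self, offset):
        return self._records[offset]


log = AppendOnlyLog()
log.append("2020-05-26", "Billie Eilish: Not My Responsibility")
last = log.append("2020-07-30", "Kehlani: Can I")
print(log.read(last))
```

The class deliberately offers no update or delete method, and trying to assign to a field of a stored `Record` raises an error.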

Another fascinating fact about Kafka is that Kafka can function as a hybrid system between a database and a messaging system.

Kafka topics are persisted on disk, not memory, for a certain period. During this period, Kafka provides durable storage of the messages. This is called a retention policy, and the retention period can be configured by engineers as per our needs, depending on the topic. For instance, if the topic is metrics, we’d set a short retention period, as we usually don’t need the logs of the metrics for longer than a couple of weeks. On the other hand, a topic like subscription_purchase_history will need to be set to a much longer retention period because we need a user’s purchase history stored in the system for years.
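As a rough illustration of time-based retention, the sketch below keeps records only while they are inside a retention window. It is a simplified model under my own assumptions: `RetainedTopic`, `purge`, and the five-year figure are made up for the example. In Kafka itself, retention is set through topic configuration such as `retention.ms`, and old data is removed in whole log segments rather than record by record.

```python
class RetainedTopic:
    """Toy topic that forgets records older than its retention period."""

    def __init__(self, retention_seconds):
        self.retention_seconds = retention_seconds
        self._records = []  # list of (timestamp_seconds, value)

    def append(self, timestamp, value):
        self._records.append((timestamp, value))

    def purge(self, now):
        # Keep only records that are still inside the retention window.
        cutoff = now - self.retention_seconds
        self._records = [(ts, v) for ts, v in self._records if ts >= cutoff]

    def values(self):
        return [v for _, v in self._records]


DAY = 24 * 60 * 60

metrics = RetainedTopic(retention_seconds=14 * DAY)  # short-lived data
purchases = RetainedTopic(retention_seconds=5 * 365 * DAY)  # kept for years

for topic in (metrics, purchases):
    topic.append(0, "event from day 0")
    topic.append(20 * DAY, "event from day 20")
    topic.purge(now=30 * DAY)

print(metrics.values())    # only the day-20 event is inside the 14-day window
print(purchases.values())  # both events are still retained
```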

In a Kafka cluster, even if a node that stores messages restarts or dies, the data won’t be lost as long as the topic is replicated, since the data is then stored across multiple servers in the cluster. This means there are multiple copies of the same data in a cluster in case one of the brokers goes down or becomes unavailable.
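The following sketch shows why keeping several copies protects the data. It is heavily simplified: in Kafka, each partition has a leader replica that followers copy from, whereas this toy `ReplicatedPartition` (a hypothetical name) writes to every live copy at once.

```python
class ReplicatedPartition:
    """Toy partition whose records are copied to several brokers."""

    def __init__(self, broker_ids):
        self.replicas = {broker_id: [] for broker_id in broker_ids}
        self.alive = set(broker_ids)

    def append(self, value):
        # Simplification: every live replica receives the write immediately.
        for broker_id in self.alive:
            self.replicas[broker_id].append(value)

    def fail(self, broker_id):
        self.alive.discard(broker_id)

    def read_all(self):
        if not self.alive:
            raise RuntimeError("no replica available")
        # Read from any surviving replica (here: the lowest broker ID).
        survivor = min(self.alive)
        return list(self.replicas[survivor])


partition = ReplicatedPartition(broker_ids=[1, 2, 3])
partition.append("user_registered: user#1")
partition.fail(1)  # one broker goes down
print(partition.read_all())  # the record is still readable from another copy
```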

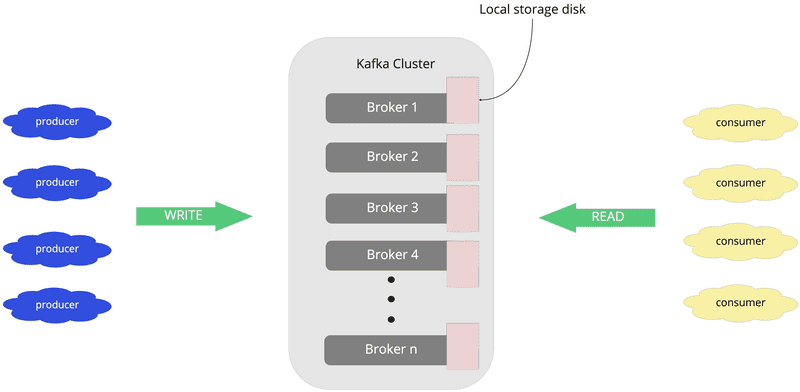

In Apache Kafka, producers and consumers are the applications you as an engineer develop, as you can see in Image 1-2 above. Producer applications write data into the Kafka cluster. Consumer applications then read the data out of the Kafka cluster and do things like generating reports; creating dashboards; sending notifications; and performing business logic, which includes making campaigns or creating another pipeline to send data to other applications.

To summarize, the architecture of Kafka is like this:

- Producers act as publishers and consumers act as subscribers.

- Thanks to the decoupling, slow consumers don’t affect producers.

- Engineers can freely add more consumers without affecting producers.

- The failure of consumers doesn’t affect the system.

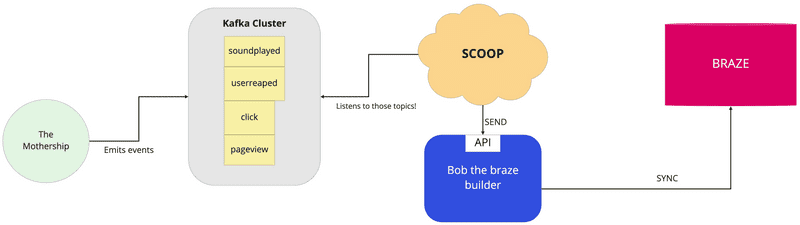

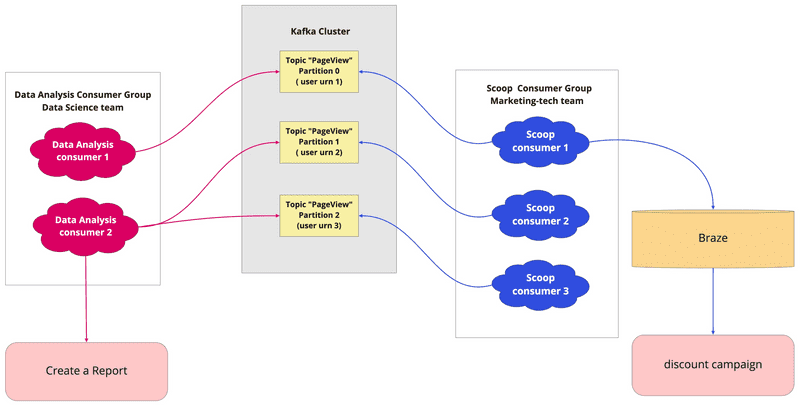

At SoundCloud — especially on my team, Marketing Tech — we own multiple consumer applications, which do interesting things by consuming topics from the Kafka cluster. As Image 1-3 illustrates, one of the consumer applications we own is called Scoop. Scoop listens to the events for topics from the Kafka cluster, such as pageviews, sound_played, user_primary_email_changed, user_registered, user_reaped, and user_email_confirmed. These events are initially emitted to the Kafka cluster from our legendary Mothership. Then, Scoop pulls the messages from the Kafka cluster and sends them to another system called Bob the Braze Builder, which acts as a proxy to sync data to our CRM tool, Braze. Based on the events persisted in Braze, our Growth Marketing Team can create personalized campaigns for each user.

Powerful Design Choice of Kafka Consumer Groups

By definition, a consumer group is a group of consumer instances that share the same group_id. A consumer group exists so that Kafka topics can be read in parallel as a system scales up. In this section, I’d like to demonstrate why Kafka consumer groups are such a great design choice and how we benefit from them.

The simple fact that messages don’t get destroyed after being read and committed is key in enabling different consumer groups to work independently.

Kafka consumer groups are designed as follows.

A consumer instance always belongs to a consumer group; even a lone consumer instance is a member of a group. As the system scales up, more consumer instances are added to the group so that the partitions can be shared among them, which means a consumer group usually contains multiple consumer instances.

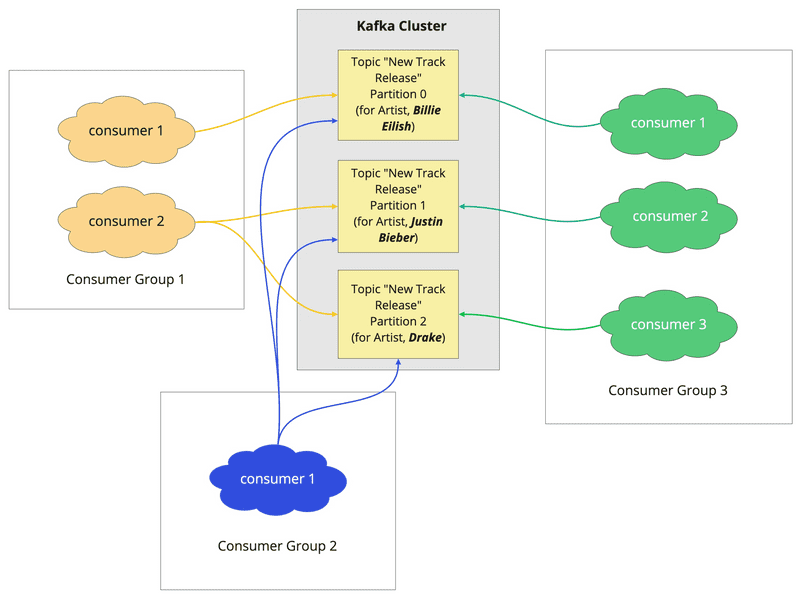

Another interesting fact is that a partition is read by only one consumer instance within the same consumer group. For example, if Partition 0 is read and committed by Consumer 1, this partition won’t be read by any other consumer instances from the same consumer group. However, Partition 0 can be read by any other consumer instances from different consumer groups. In other words, multiple reads on the same partition can be happening simultaneously or at a different time, as long as the consumer instances reading the same partition are from different consumer groups.

Remember what I said about Kafka being a database for a certain amount of time? In Kafka, messages are retained until their retention period is over. Therefore, multiple consumer groups can benefit from reading the same messages during the retention period. This is one big difference compared to traditional message queues like RabbitMQ, where a message is removed from the queue once it has been consumed and acknowledged.

Image 1-4 demonstrates that a partition gets read by one consumer instance exclusively within the same consumer group. When there’s capacity, one consumer instance can read more than one partition.

As you can see, Partition 0 isn’t read by other consumer instances from the same consumer group. However, it is read by other consumer instances from different consumer groups.
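The exclusivity rule can be sketched as a simple round-robin assignment. Kafka ships several assignment strategies, and this is not a reproduction of any of them; `assign_partitions` and the consumer names are hypothetical.

```python
def assign_partitions(partitions, consumers):
    """Round-robin assignment: each partition gets exactly one consumer."""
    assignment = {consumer: [] for consumer in consumers}
    for index, partition in enumerate(sorted(partitions)):
        owner = consumers[index % len(consumers)]
        assignment[owner].append(partition)
    return assignment


partitions = [0, 1, 2]

# Each group is assigned independently of the other.
group_a = assign_partitions(partitions, ["a-consumer-1", "a-consumer-2"])
group_b = assign_partitions(partitions, ["b-consumer-1"])

print(group_a)  # Partition 0 has a single owner inside group A
print(group_b)  # Partition 0 is also read by group B, independently
```

Within a group, every partition appears under exactly one consumer, and a consumer with spare capacity can own more than one partition. Across groups, the same partition shows up once per group.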

Rebalancing consumer instances in a consumer group is automatic. When a consumer instance dies, the partitions that were assigned to it are taken over either by a replacement instance that comes up or by another consumer instance that is still running in the group.
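Here is a small sketch of what happens to the assignment when an instance dies. It only illustrates the idea, assuming a naive strategy that recomputes everything from scratch; `rebalance` and the consumer names are made up.

```python
def rebalance(partitions, live_consumers):
    """Spread partitions over whichever consumers are currently alive."""
    if not live_consumers:
        return {}
    members = sorted(live_consumers)
    assignment = {member: [] for member in members}
    for index, partition in enumerate(sorted(partitions)):
        assignment[members[index % len(members)]].append(partition)
    return assignment


partitions = [0, 1, 2]
live = {"consumer-1", "consumer-2", "consumer-3"}
before = rebalance(partitions, live)

live.discard("consumer-2")  # consumer-2 dies
after = rebalance(partitions, live)

print(before)
print(after)  # the orphaned partition now has a new owner
```

No partition is left without an owner after the failure. Because this naive version recomputes the whole assignment, it can also move partitions whose owner is still alive.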

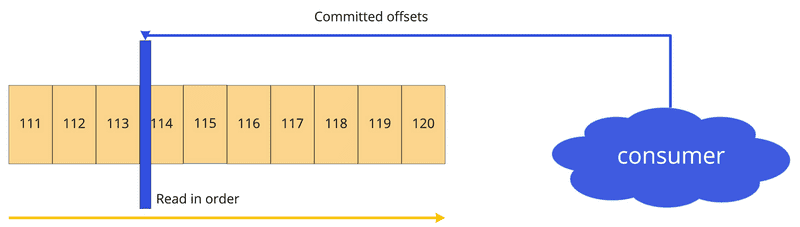

Moreover, Kafka stores the offsets up to which each consumer group has read. Consider Image 1-5. When a consumer processes data, it commits the offsets. Even if a consumer process dies or restarts, it can resume reading from where it left off, thanks to the committed consumer offsets. When consumers that belong to different consumer groups are reading the same partition, they will probably have different offsets, because their pace of reading likely varies.
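The bookkeeping can be sketched like this. It is a toy model rather than Kafka's API: `OffsetStore`, `consume`, and the group IDs `scoop` and `data-science` are names I picked for the illustration.

```python
class OffsetStore:
    """Toy stand-in for Kafka's committed-offset bookkeeping."""

    def __init__(self):
        self._committed = {}  # (group_id, partition) -> next offset to read

    def commit(self, group_id, partition, next_offset):
        self._committed[(group_id, partition)] = next_offset

    def position(self, group_id, partition):
        # Assumption: a group without a commit starts at the beginning.
        # In real Kafka this depends on the auto.offset.reset setting.
        return self._committed.get((group_id, partition), 0)


def consume(log, offsets, group_id, partition, max_records):
    """Read up to max_records from the committed position, then commit."""
    start = offsets.position(group_id, partition)
    batch = log[start:start + max_records]
    offsets.commit(group_id, partition, start + len(batch))
    return batch


partition_0 = ["pageview-1", "pageview-2", "pageview-3", "pageview-4"]
offsets = OffsetStore()

first = consume(partition_0, offsets, "scoop", 0, max_records=3)
# Imagine the "scoop" consumer crashes here and a new instance takes over.
resumed = consume(partition_0, offsets, "scoop", 0, max_records=3)
# A different group reads the same partition at its own pace.
analytics = consume(partition_0, offsets, "data-science", 0, max_records=1)

print(first, resumed, analytics)
```

The restarted consumer continues with the fourth message instead of starting over, while the second group's position is tracked separately.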

The offset numbers are generated when a message is produced and sent to a Kafka cluster, just like a page number is already created when an author publishes a book.

The nice thing here is that the ordering is always guaranteed in the same partition; it isn’t possible for consumers to read Offset120 before reading Offset119 inside Partition 0.

Let’s take a closer look at Image 1-4 one more time. You see that whenever there’s a new_track_release by Billie Eilish, the message gets written into Partition 0, Justin Bieber’s message gets written into Partition 1, and Drake’s message gets written into Partition 2. This isn’t magic. The reason behind this is that we as engineers most likely set the same key for the same artist so that the messages that belong to the same artist can be written into the same partition. This is something we can do by setting up the same key when building a producer application (a subject we won’t cover in this blog post). We’d do it this way because the ordering is guaranteed in the same partition, and it’s convenient for consumers to consume the messages in the order of events.

For instance, let’s say there are two songs released by Billie Eilish with two different timestamps.

The message “‘Therefore I Am’ has been released on 12.11.2020” is written to the Kafka cluster before the message “‘NDA’ has been released on 09.07.2021.” This means the message “‘Therefore I Am’ has been released” will always have a smaller offset number than the message “‘NDA’ has been released,” as long as both messages are stored in the same partition. Consumer applications will therefore always read the messages in order, from the smaller offset to the larger one.
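The effect of keying can be sketched as follows. This is a toy model, not a producer application: Kafka's default partitioner hashes the key with murmur2, whereas this example substitutes CRC32 from the Python standard library. `PartitionedTopic`, `partition_for`, the keys, and the placeholder message for the second artist are all made up for the illustration.

```python
import zlib


def partition_for(key, num_partitions):
    """Map a key to a partition with a stable hash (CRC32, for illustration)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


class PartitionedTopic:
    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def send(self, key, value):
        index = partition_for(key, len(self.partitions))
        self.partitions[index].append(value)
        # Return where the record landed: (partition, offset).
        return index, len(self.partitions[index]) - 1


topic = PartitionedTopic(num_partitions=3)
first = topic.send("billie-eilish", "'Therefore I Am' released on 12.11.2020")
topic.send("drake", "placeholder release event")
second = topic.send("billie-eilish", "'NDA' released on 09.07.2021")

print(first, second)
```

Both messages with the same key land in the same partition, and the earlier one gets the smaller offset, which is what lets a consumer see the two releases in order.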

To summarize, in Apache Kafka:

- An offset is created when a message gets written into Kafka, and it corresponds to a specific message in a specific partition.

- Consumers read messages by subscribing to one or more topics and reading messages in the order in which they were produced in every partition. A consumer keeps track of its position in the stream of data by remembering what offset was already consumed.

- It’s a consumer’s responsibility to remember its position in the partition by storing offsets. By doing so, consumer applications can stop and restart without losing their position in the stream of data.

Some argue that Apache Kafka is limited because it can’t guarantee ordering across partitions. However, as long as you as an engineer set the same partition key for related messages, it’ll serve your needs. Usually, having the order guaranteed within a partition is enough.

Image 1-6 shows how the same partition can be read by different consumer groups and do useful things independently at SoundCloud. In this diagram, Scoop listens to a topic, pageview, which fires when a user views a subscription page. For instance, if user#1 with a Go subscription checks out the subscription page, the pageview event gets fired. When this event fires, Scoop will consume the event and sync it to our CRM tool, Braze. Then, our Growth Marketing Team can provide personalized campaigns or discount suggestions on a Go+ subscription to user#1. At the same time, our Data Science Team’s data analysis consumer group can consume the same event from the same partition and create a report to analyze user#1’s behavior. This demonstrates how Kafka messages can be consumed by multiple downstream systems to do interesting and useful things.

In the End, a Message Will Return in a Different Way

Returning to the story with Franz Kafka, remember how the little girl found the tiny letter hidden in the doll many years after Franz Kafka passed away? Now you might understand that the doll acts as a broker, and Franz Kafka himself acts as a producer, and the little girl is like a consumer.

Of course, this story is oversimplified. In reality, our engineering ecosystem mostly lives in a distributed system. It requires Kafka to be scalable, reliable, and fault tolerant.

Kafka’s choice of a publish–subscribe system and the architecture of consumer groups are quite valuable. Ultimately, Apache Kafka:

- Is a great tool for ETL and big data ingestion

- Has disk-based (not memory-based) retention

- Has a high throughput

- Is highly scalable

- Is fault tolerant

- Has fairly low latency

- Is highly configurable

- Tackles integration complexity

- Allows multiple producers and consumers

As an engineer, it’s your decision how to design the system architecture and data flow, and which messaging system to use for your business needs. Apache Kafka can be a useful choice if you want your event data to be retained and consumed by many other downstream systems, and if you want to fight the ever-growing complexity of integration in the future!