Service Architecture at SoundCloud — Part 1: Backends for Frontends

This article is part of a series of posts aiming to cast some light onto how service architecture has evolved at SoundCloud over the past few years, and how we’re attempting to solve some of the most difficult challenges we encountered along the way.

Backends for Frontends

SoundCloud pioneered the Backends for Frontends (BFF) architectural pattern back in 2013 while moving away from an eat-your-own-dog-food model that had reached its limits. That model used a single API (the Public API) for both official applications and third-party integrations. At the same time, the need to scale operationally and organizationally led to a migration from a monolith-based architecture to a microservices architecture. The proliferation of new microservices, paired with the introduction of a private/internal API for the monolith (effectively turning the monolith into yet another microservice), opened the door for dedicated APIs to power our frontends. Thus BFF was born, and it enabled autonomy for teams, along with other advantages discussed below.

In a nutshell, BFF is an architectural pattern that involves creating a dedicated API gateway for each device or interface type, with the goal of optimizing each API for its particular use case.

Plenty has been written about BFF, the theoretical advantages that it provides, and how it compares to other approaches and technologies like centralized API gateways and GraphQL. However, little can be found on real-life experiences, risks, and tradeoffs encountered along the way, so we decided to write this series to shed some light on these topics.

BFFs at SoundCloud in 2021

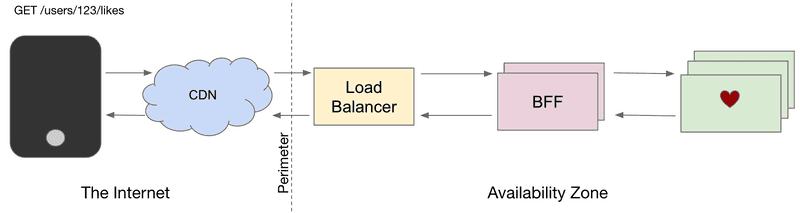

SoundCloud operates dozens of BFFs, each powering a dedicated API. BFFs take on API gateway responsibilities, including rate limiting, authentication, header sanitization, and cache control. All external traffic entering our data centers is processed by one of our BFFs. Combined, they handle hundreds of millions of requests per hour.

BFFs make use of an internal library providing edge capabilities, as well as extension points for custom behavior. New library releases are semi-automatically rolled out to all BFFs within hours.
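To make two of the edge responsibilities listed above more concrete, here is a minimal sketch of header sanitization and rate limiting. It is not SoundCloud's internal library: the header names, the `TokenBucket` class, and its parameters are all assumptions chosen for illustration.

```python
# Hypothetical set of headers that only internal services may set.
INTERNAL_HEADERS = {"x-internal-user-id", "x-internal-trace"}


def sanitize_headers(headers):
    """Drop any incoming header that is reserved for internal use."""
    return {k: v for k, v in headers.items() if k.lower() not in INTERNAL_HEADERS}


class TokenBucket:
    """A simple token-bucket rate limiter (one bucket per client is assumed)."""

    def __init__(self, capacity, refill_per_second):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)
        self.updated_at = 0.0

    def allow(self, now):
        """Return True if a request arriving at time `now` (seconds) may proceed."""
        elapsed = max(0.0, now - self.updated_at)
        # Refill proportionally to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated_at = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Passing the clock in explicitly (`now`) keeps the limiter deterministic and easy to test. A real edge layer would read the current time itself and keep one bucket per client or per credential.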

Examples of BFFs include our Mobile API (powering Android and iOS clients), our Web API (powering our web frontends and widget), and our Public and Partner APIs.

BFFs are maintained using an inner source model: individual teams contribute changes, and a core team reviews and approves them based on principles discussed in a collective. The collective, organized by a platform lead, meets regularly to discuss issues and share knowledge.

The Good

One of the key advantages BFFs provide is autonomy. By having separate APIs per client type, we can optimize our APIs for whatever is convenient for each client type without the need for synchronization points and difficult compromises. For example, our mobile clients tend to prefer larger responses with a high number of embedded entities as a way to minimize the number of requests and to leverage internal caches, while our web frontend prefers finer-grained responses and dynamic augmentation of representations.
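The difference between the two styles can be sketched as follows. The data, field names, and the `expand` parameter are hypothetical. In particular, reading "dynamic augmentation" as opt-in expansion of linked entities is an assumption, not a description of the actual Web API.

```python
# Hypothetical in-memory stand-ins for downstream services.
TRACKS = {"t1": {"id": "t1", "title": "Intro", "user_id": "u1"}}
USERS = {"u1": {"id": "u1", "username": "ana"}}


def mobile_track(track_id):
    """Mobile-style representation: the related user is always embedded."""
    track = TRACKS[track_id]
    return {
        "id": track["id"],
        "title": track["title"],
        "user": dict(USERS[track["user_id"]]),
    }


def web_track(track_id, expand=()):
    """Web-style representation: a link by default, embedded only on request."""
    track = TRACKS[track_id]
    body = {
        "id": track["id"],
        "title": track["title"],
        "user": {"href": "/users/" + track["user_id"]},
    }
    if "user" in expand:
        body["user"] = dict(USERS[track["user_id"]])
    return body
```

Both functions read the same underlying data. Only the shape of the response differs, which is the kind of decision each BFF can make on its own.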

Another advantage of BFFs is resilience. A bad deploy might bring down an entire BFF in an availability zone, but it shouldn’t bring down the entire platform. This is in addition to many other resilience mechanisms in place.

Additionally, high autonomy and lower risk lead to a high pace of development. Our main BFFs are deployed multiple times per day and receive contributions from all over the engineering organization.

Finally, BFFs enable the implementation of sometimes ugly but necessary workarounds and mitigation strategies (for example, a fix for a client bug that affects specific versions) without affecting the overall complexity of the platform.

The Bad

BFFs provide many advantages, but they can also be a source of problems if they’re not part of a broader service architecture that’s able to keep complexity and duplication at bay.

In service architectures with very small microservices that do little more than CRUD, and with no intermediate layers between these microservices and BFFs, feature integration (with all the associated business logic) tends to end up in the BFFs themselves. Although this problem also exists with other models, like centralized API gateways, it’s particularly problematic in architectures with multiple BFFs, since this logic can end up duplicated multiple times, with diverging and inconsistent implementations that drift apart over time.

This issue becomes critical for authorization rules that can only be applied at integration time (for example, because the pieces of information required to make a decision are spread across multiple microservices). Reimplementing these rules in every BFF does not scale as more and more BFFs are added.
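As an illustration of why such a rule can only run at the point of integration, consider the sketch below. The two services, their fields, and the rule itself are invented for this example and do not reflect SoundCloud's actual authorization logic.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class TrackMetadata:
    """Returned by a hypothetical track metadata service."""
    track_id: str
    owner_id: str
    is_private: bool


@dataclass(frozen=True)
class TrackPolicy:
    """Returned by a hypothetical geo-policy service."""
    track_id: str
    blocked_countries: FrozenSet[str]


def can_view_track(viewer_id: Optional[str], viewer_country: str,
                   metadata: TrackMetadata, policy: TrackPolicy) -> bool:
    """Decide visibility using data that no single service holds on its own."""
    if viewer_id is not None and viewer_id == metadata.owner_id:
        return True  # assumed rule: owners can always see their own tracks
    if metadata.is_private:
        return False
    return viewer_country not in policy.blocked_countries
```

Neither service can answer the question alone, so whichever component joins the two responses has to make the decision. If every BFF carries its own copy of `can_view_track`, the copies can drift apart as the rule changes.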

At SoundCloud, this problem manifested as the Track and Playlist core entities grew and were decomposed into multiple microservices serving parts of the final representations assembled in each of the BFFs. Suddenly, the authorization logic needed to be moved to the point of integration, which, at the time, was the BFF. This was not too concerning at first, with just a handful of BFFs and very simple authorization logic, but as the logic grew in complexity and the number of BFFs increased, it caused many problems. This will be the focus of the next posts in this series.

The Ugly

Effective operation of multiple BFFs requires a set of platform-wide capabilities; without them, BFFs tend to proliferate unnecessarily. For example, application entitlements (in addition to user entitlements) are needed to restrict certain applications and third-party integrations to specific endpoints. In their absence, it’s tempting to spawn an entire new BFF for narrow use cases with specific access control requirements. There needs to be a strategy to decide how many BFFs are too many and when to create one versus when to reuse an existing one. Even though BFFs are designed to provide autonomy, there’s a tradeoff between autonomy and added maintenance and operational overhead that needs to be carefully managed.
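A minimal sketch of how application entitlements might sit next to user entitlements is shown below. The application IDs, entitlement names, and endpoint table are hypothetical and are here only to make the idea concrete.

```python
# Hypothetical entitlements granted to each registered application.
APP_ENTITLEMENTS = {
    "official-android": {"tracks:read", "uploads:write"},
    "partner-app": {"tracks:read"},
}

# Hypothetical mapping from endpoint to the entitlement it requires.
ENDPOINT_REQUIREMENTS = {
    ("GET", "/tracks"): "tracks:read",
    ("POST", "/uploads"): "uploads:write",
}


def is_allowed(app_id, user_entitlements, method, path):
    """Allow a request only if both the application and the user are entitled."""
    required = ENDPOINT_REQUIREMENTS.get((method, path))
    if required is None:
        return False  # deny endpoints with no declared requirement
    app_ok = required in APP_ENTITLEMENTS.get(app_id, set())
    user_ok = required in user_entitlements
    return app_ok and user_ok
```

With a check like this, a partner integration can share an existing BFF while being confined to a subset of its endpoints, instead of getting a BFF of its own.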

We’ve also seen a tendency to push complex client-side logic to the BFF. This stems from the initial idea that a BFF is an extension of the client, and that therefore it should be treated as “the backend side of the client.” This has worked well in some cases, but in others it has led to problems. For example, pushing pagination to the server (recursively paginating to return an entire collection in a single request), although faster for basic use cases, can lead to timeouts, restrictive limits for collection sizes, and fan-out storms that may bring the entire system down.
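The sketch below shows one way to keep server-side pagination bounded, assuming a cursor-based downstream API. The `fetch_page` contract, the limits, and the `truncated` flag are assumptions for illustration. A production version would also need a time budget and backpressure, which are left out here.

```python
def collect_all(fetch_page, max_pages=10, max_items=500):
    """Follow cursors on the server, but stop at hard limits.

    `fetch_page(cursor)` is assumed to return `(items, next_cursor)`, where
    `next_cursor` is None on the last page. Returns `(items, truncated)`.
    """
    items = []
    cursor = None
    for _ in range(max_pages):
        page, cursor = fetch_page(cursor)
        items.extend(page)
        if len(items) >= max_items:
            truncated = len(items) > max_items or cursor is not None
            return items[:max_items], truncated
        if cursor is None:
            return items, False
    # Page budget exhausted while more pages remain.
    return items, True


# A fake downstream collection used only to exercise the function.
DATA = list(range(25))


def fake_fetch_page(cursor):
    start = cursor or 0
    end = start + 10
    next_cursor = end if end < len(DATA) else None
    return DATA[start:end], next_cursor
```

The limits cap the number of downstream calls a single client request can trigger, which is what turns an unbounded fan-out into a predictable one. The cost is the restrictive collection size mentioned above: the client has to handle a truncated result.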

Although BFFs enable some form of autonomy, it’s also important to recognize that BFFs are at the intersection of two worlds, and the idea of full autonomy for client developers is just an illusion. Extensive collaboration between frontend and backend engineers is required to ensure optimal API designs that are convenient for client developers to use, in addition to being optimized for distributed environments and their intricacies.

Summary

Backends for Frontends is an architectural pattern that can lead to a high degree of autonomy and pace of development. Like all engineering decisions, it comes with a set of tradeoffs that must be well understood and managed. In particular, a good service architecture is critical for scalability, security, and maintainability, and there are limits to how much autonomy can be achieved.

In future posts, we’ll dive into some of the unintended consequences of using the BFF pattern and discuss how our service architecture has evolved to address them. Stay tuned!