Using Kubernetes Pod Metadata to Improve Zipkin Traces

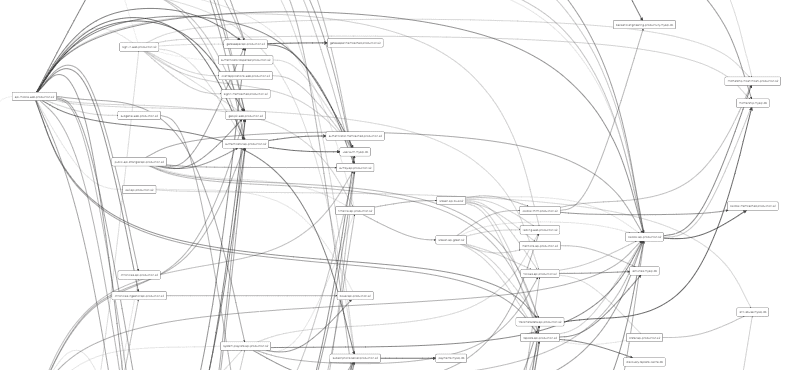

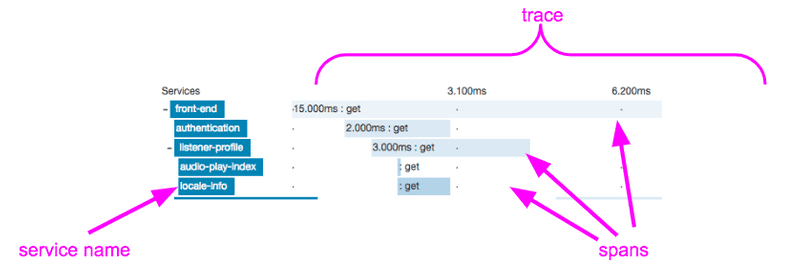

SoundCloud is built on hundreds of microservices. This creates many challenges, among them debugging latency issues across the services involved in completing a single request. It’s natural, then, that we were early adopters of Zipkin, a distributed tracing tool that emerged from Twitter and was built in part to help engineers tackle these sorts of problems.

In recent years, we’ve switched to using Kubernetes as our container deployment platform. Kubernetes makes it easy to break a microservice down into narrowly focused operational responsibilities, which often correspond to different workloads the service must handle and are typically expressed in Kubernetes as distinct pod templates.

Because each Kubernetes pod is assigned its own IP address, and because each pod has a well-defined responsibility, it’s possible to make higher-level assumptions about an IP address by using the pod data that Kubernetes accumulates across deploys, which is easy to access. This is in contrast to the pre-Kubernetes era at SoundCloud, when an IP address referred to a “bare-metal” machine that likely hosted several very different services.

The BEEP team [1] here at SoundCloud recently revisited our Zipkin setup, primarily to make two improvements: to increase the quality and accuracy of service names in Zipkin, and to enhance Zipkin span data.

Updating Zipkin service names across our hundreds of services in concert with a constantly evolving backend architecture has been a particular challenge. This is because Zipkin uses service names to represent how one service relates to another, and without good, consistent service names, the value of Zipkin as a visualization or debugging tool is greatly reduced.

Defining Zipkin Service Names in One Central Place

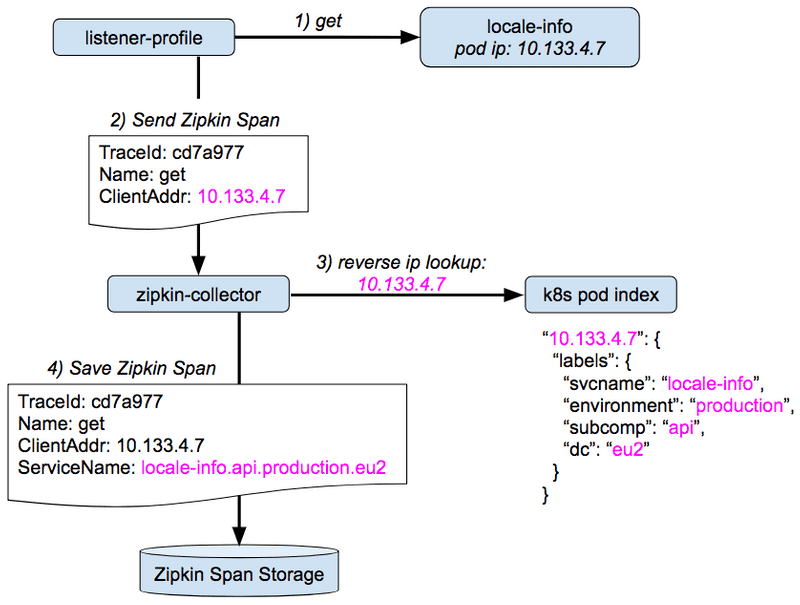

In a service-to-service interaction, the caller service reports the IP address of the callee service’s pod as part of the span data it sends to Zipkin. After brainstorming, we realized we could customize the Zipkin collector to transform the span on the fly, performing a quick reverse IP lookup of the Kubernetes pod metadata and replacing the caller’s version of the Zipkin service name with one assembled from the callee’s pod labeling.
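The transformation described above can be sketched roughly as follows. This is only an illustration, not SoundCloud’s collector code: it treats a span as a plain dictionary shaped like Zipkin’s v2 JSON (`remoteEndpoint`, `tags`), and the `lookup_pod` callable, the `app` and `component` label keys, the label values, and the `original.service.name` tag key are all hypothetical stand-ins. It also keeps the caller’s original name as a tag, which this post mentions further down.

```python
from typing import Callable, Optional

# Hypothetical label keys; the post does not say which labels are used.
NAME_LABELS = ("app", "component")
# Hypothetical tag key for preserving the caller-supplied name.
ORIGINAL_NAME_TAG = "original.service.name"

# Maps a pod IP to its metadata, or None if the IP is unknown.
PodLookup = Callable[[str], Optional[dict]]


def service_name_from_labels(labels: dict) -> Optional[str]:
    """Join whichever naming labels are present, or return None if none are."""
    parts = [labels[key] for key in NAME_LABELS if labels.get(key)]
    return "-".join(parts) if parts else None


def rename_callee(span: dict, lookup_pod: PodLookup) -> dict:
    """Replace the callee's service name with one built from its pod labels.

    The input span is never mutated; it is returned unchanged when the
    callee IP is missing, unknown, or belongs to a pod without naming labels.
    """
    remote = span.get("remoteEndpoint") or {}
    ip = remote.get("ipv4")
    pod = lookup_pod(ip) if ip else None
    new_name = service_name_from_labels(pod.get("labels", {})) if pod else None
    if new_name is None:
        return span

    tags = dict(span.get("tags", {}))
    if remote.get("serviceName"):
        # Keep the name the caller reported so it is not lost.
        tags[ORIGINAL_NAME_TAG] = remote["serviceName"]
    return {
        **span,
        "remoteEndpoint": {**remote, "serviceName": new_name},
        "tags": tags,
    }


# Usage with an in-memory stand-in for the pod metadata lookup.
pods = {"10.2.3.4": {"labels": {"app": "locale-info", "component": "web"}}}
span = {
    "name": "get",
    "remoteEndpoint": {"serviceName": "localeinfo", "ipv4": "10.2.3.4"},
}
renamed = rename_callee(span, pods.get)
```

Returning the span untouched on a failed lookup is a deliberate choice in this sketch: a span with the caller’s name is still more useful than a dropped or half-rewritten one.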

The result was an across-the-board improvement in both Zipkin service names and dependency graph quality.

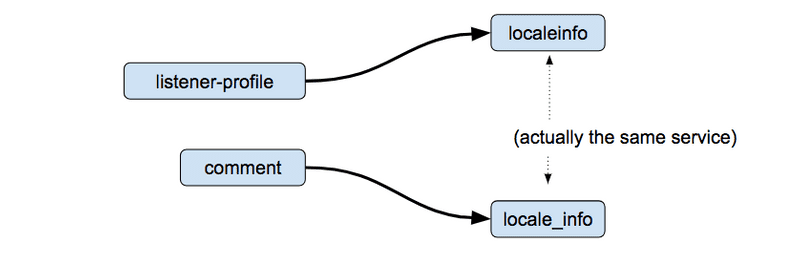

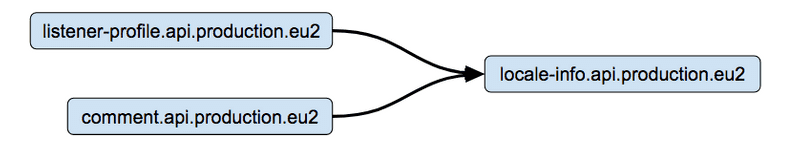

As a side effect of this approach, control over how a node is represented in Zipkin switched from the caller services to the callee service:

For example, the team that owns the locale-info service decides, for all teams, how that service is named, which removes the need for multiple teams to coordinate on establishing or changing the service name. The original Zipkin service name given by the caller is not lost, either: we add it as a span annotation.

Useful Span Annotations from Pod Metadata

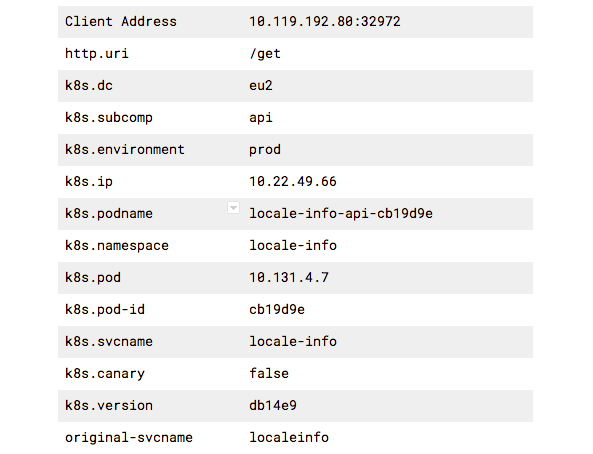

Zipkin span collection generates significant reverse-lookup load, but our integration hits a simple, very fast Kubernetes metadata caching service written by the SoundCloud Production Engineering team. Responses from this service contain a rich set of pod metadata that goes beyond what’s merely necessary to assemble a good Zipkin service name, and we add all of this data as extra annotations on the Zipkin span.

The result is that when looking into a latency problem, we not only see basic Zipkin information about how long a service took to respond, but also know details like the Git revision of the service code that was running on the particular pod, because we consistently set that as a Kubernetes label on all pods. This is a level up in an engineer’s ability to efficiently pinpoint production problems.
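As a rough sketch of this enrichment step, the function below copies pod metadata onto a span as extra tags. It is not the actual collector code: the span is again a plain dictionary shaped like Zipkin’s v2 JSON, `lookup_pod` stands in for a client of the metadata caching service, and the `k8s.` tag prefix, the metadata field names, and the `git-revision` label key are assumptions made for illustration.

```python
from typing import Callable, Optional

# Hypothetical prefix that keeps pod metadata apart from reporter-supplied tags.
TAG_PREFIX = "k8s."

# Maps a pod IP to its metadata, or None if the IP is unknown.
PodLookup = Callable[[str], Optional[dict]]


def pod_metadata_tags(pod: dict) -> dict:
    """Flatten pod metadata into string tags such as 'k8s.label.git-revision'."""
    tags = {}
    for field in ("name", "namespace", "node"):  # assumed metadata fields
        if pod.get(field):
            tags[TAG_PREFIX + field] = str(pod[field])
    for key, value in pod.get("labels", {}).items():
        tags[f"{TAG_PREFIX}label.{key}"] = str(value)
    return tags


def enrich_span(span: dict, lookup_pod: PodLookup) -> dict:
    """Add the callee pod's metadata to the span's tags without mutating it."""
    remote = span.get("remoteEndpoint") or {}
    pod = lookup_pod(remote["ipv4"]) if remote.get("ipv4") else None
    if not pod:
        return span
    # Existing tags win on key collisions, so reported data is never overwritten.
    return {**span, "tags": {**pod_metadata_tags(pod), **span.get("tags", {})}}


# Usage with an in-memory stand-in for the metadata service.
pod = {
    "name": "locale-info-web-abc12",
    "namespace": "default",
    "labels": {"git-revision": "3f9c2d1"},
}
span = {
    "name": "get",
    "remoteEndpoint": {"ipv4": "10.2.3.4"},
    "tags": {"http.path": "/locales"},
}
enriched = enrich_span(span, {"10.2.3.4": pod}.get)
```

With tags like these on every span, an engineer looking at a slow call could read the pod name and deployed revision directly from the trace.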

Our approach involves inserting a small amount of logic into the Zipkin collector and then leveraging our internal Kubernetes pod labeling scheme to improve span data. The result is normalized and modernized service names, along with greatly enhanced details embedded in Zipkin traces that engineers can use to troubleshoot production issues.

Value-added span transformation ideas are getting more attention in the Zipkin community. The OpenZipkin team has a collector extension framework in the works, and we’re excited to see what other Zipkin “mashups” come out of the Zipkin community. If you’re interested, please join the OpenZipkin Gitter discussion.

Thank You

- Benjamin Debeerst, SoundCloud Backend Engineering Productivity Team

- Kristof Adriaenssens, Engineering Manager, SoundCloud Content Team

- René Treffer, SoundCloud Production Engineering Team

- Tobias Schmidt, SoundCloud Production Engineering Team

- Adrian Cole, Pivotal Spring Cloud Team and prominent Zipkin contributor

1: The Backend Engineering Productivity team’s mission is to help solve common, cross-cutting engineering problems that our product-focused engineers face. We also build and maintain many of SoundCloud’s “core” services.