How SoundCloud Uses HAProxy with Kubernetes for User-Facing Traffic

A little less than two years ago, SoundCloud began the journey of replacing our homegrown deployment platform, Bazooka, with Kubernetes. Kubernetes automates deployment, scaling, and management of containerized applications.

The Problem

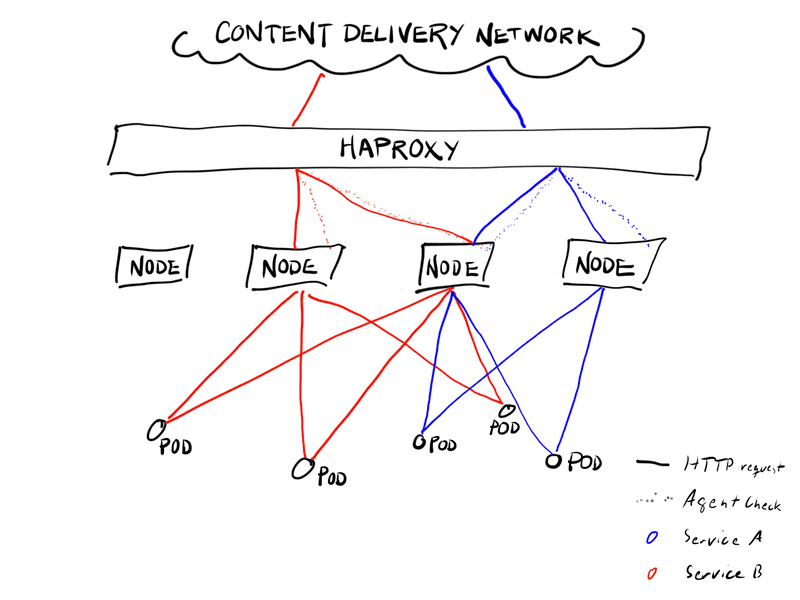

An ongoing challenge with dynamic platforms like Kubernetes is routing user traffic — more specifically, routing API requests and website visits from our users to the individual pods running in Kubernetes.

Most of SoundCloud runs in physical data centers, so we can’t leverage the built-in support for cloud load balancers in Kubernetes. At the edge of our infrastructure, a fleet of HAProxy servers terminates SSL connections and, based on simple rules, forwards traffic to various internal services. The configuration for these servers is generated and tested separately before it is deployed to these terminators. Because this process has many safeguards built in, it takes a long time to complete and cannot keep up with the rate at which pods in Kubernetes move around. At its core, the challenge of getting user traffic to these constantly moving pods is a mismatch between our static termination layer and the highly dynamic nature of Kubernetes.

The Process

To address this challenge, we initially configured the terminator layer to forward HTTP requests to a separate HAProxy-based Ingress controller. This did not work well for us: the Ingress controller was intended for low-volume internal traffic and was not very reliable.

Our users generate a lot of traffic, and every problem in the traffic stack means that SoundCloud is not working for someone. Between the Kubernetes Ingress and the terminator configuration, we had two layers of Layer 7 routing that needed to match up and often didn’t, which was frustrating for developers and created extra work. We also knew that the Ingress controller would not be able to handle the long-lived connections used by some of our clients.

When SoundCloud engineers build applications, we use a custom command-line tool that generates the Namespace, Service, and Deployment objects and, depending on command-line flags, an Ingress object. We added a new flag to this tool that changes the generated Service to the NodePort type.

Kubernetes then allocates the Service a port number that is not yet used anywhere in the cluster and opens this port on every node. Connections to this port on any node are forwarded to one of the instances of the service. (In the objects our tool generates, there is a one-to-one correspondence between Service and Deployment objects. For the sake of brevity, we’ll gloss over the details of ReplicaSet, Pod, and Endpoints objects in Kubernetes here.)
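As an illustration, here is a minimal Python sketch of the kind of Service object such a flag might produce. The helper name, the `app` selector label, and the example names are hypothetical; only the field names follow the Kubernetes Service API. Leaving out `nodePort` lets Kubernetes pick a free port itself.

```python
import json


def nodeport_service(name: str, namespace: str, port_name: str,
                     port: int, target_port: int) -> dict:
    """Build a Service manifest of type NodePort (hypothetical helper)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # NodePort makes Kubernetes allocate a cluster-wide port
            # and open it on every node.
            "type": "NodePort",
            # Assumed label: selects the pods of the matching Deployment.
            "selector": {"app": name},
            "ports": [{
                "name": port_name,
                "port": port,
                "targetPort": target_port,
                "protocol": "TCP",
                # No "nodePort" key: Kubernetes chooses an unused port
                # from the node port range (30000-32767 by default).
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(nodeport_service("stream-api", "streaming",
                                      "http", 80, 8080), indent=2))
```

The printed JSON could be piped to `kubectl apply -f -`; the allocated port then shows up as `nodePort` in the stored Service.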

Note that switching a Service to NodePort is irreversible: Kubernetes does not allow removing the node port from a Service. We are still looking for a solution to this. So far, we have only needed to remove a node port early enough in a service’s lifecycle that we could delete and recreate the Service, but doing so later, once the service is used in production, could lead to service interruptions.

To configure their applications to receive traffic from the internet, application developers declare the public hostname and path, as well as the cluster, namespace, service, and port name for the applications. The systems routing public traffic to applications, such as SSL terminators, CDN distributions, and DNS entries, are configured based on this declaration.
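A declaration like this can be modeled as a small record from which downstream configuration is rendered. The sketch below is hypothetical: the field names, the backend naming scheme, and the exact rules are assumptions, though `acl`, `hdr(host)`, `path_beg`, and `use_backend` are standard HAProxy configuration keywords.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PublicRoute:
    """One developer-declared route (field names are hypothetical)."""
    hostname: str    # public hostname, e.g. "api.example.com"
    path: str        # path prefix, e.g. "/tracks"
    cluster: str     # Kubernetes cluster running the service
    namespace: str
    service: str
    port_name: str   # named port on the Service

    def backend_name(self) -> str:
        # One HAProxy backend per (cluster, namespace, service, port).
        return "_".join([self.cluster, self.namespace,
                         self.service, self.port_name])


def terminator_rules(route: PublicRoute) -> list[str]:
    """Render HAProxy frontend rules that send requests matching the
    declared host and path prefix to the route's backend."""
    acl = route.backend_name()
    return [
        f"acl {acl}_host hdr(host) -i {route.hostname}",
        f"acl {acl}_path path_beg {route.path}",
        f"use_backend {acl} if {acl}_host {acl}_path",
    ]
```

The same record could feed the other systems mentioned above (CDN, DNS); only the terminator side is sketched here.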

The Implementation

When we generate the terminator configuration, the generation script queries the Kubernetes cluster for the node port assigned to each service, along with the list of Kubernetes nodes. Originally, we added all nodes to the terminator configuration, but this turned out to be a problem.
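A sketch of that lookup, assuming the script already has the Service and node list as the JSON the Kubernetes API returns (for example from `kubectl get service <name> -o json` and `kubectl get nodes -o json`). The function names and the backend layout are hypothetical; `spec.ports[].nodePort` and `status.addresses` with type `InternalIP` are real API fields.

```python
def find_node_port(service: dict, port_name: str) -> int:
    """Return the nodePort assigned to the named port of a Service."""
    for port in service["spec"]["ports"]:
        if port.get("name") == port_name:
            return port["nodePort"]
    raise KeyError(f"service has no port named {port_name!r}")


def node_addresses(node_list: dict) -> list[tuple[str, str]]:
    """Return (node name, InternalIP) pairs from a node list."""
    result = []
    for node in node_list["items"]:
        for addr in node["status"]["addresses"]:
            if addr["type"] == "InternalIP":
                result.append((node["metadata"]["name"], addr["address"]))
                break
    return result


def render_backend(name: str, nodes: list[tuple[str, str]],
                   node_port: int) -> str:
    """Render an HAProxy backend with one server line per node."""
    lines = [f"backend {name}", "    balance roundrobin"]
    for node_name, ip in nodes:
        # "check" enables HAProxy's health check on the node port.
        lines.append(f"    server {node_name} {ip}:{node_port} check")
    return "\n".join(lines)
```

Naming servers after nodes rather than list positions keeps the generated file stable when unrelated nodes come and go.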

Each terminator independently checks the health of each port on each node. With several dozen terminators and hundreds of Kubernetes nodes, this amounted to tens of thousands of health checks every second. Because traffic goes through the nodes rather than directly to the pods, this load did not depend on the size of the service itself, so even services that normally get very little traffic needed a lot of resources just to answer these health checks.
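A back-of-the-envelope calculation shows how quickly this adds up. The fleet sizes below are illustrative assumptions, not SoundCloud’s actual numbers; the 2-second interval is HAProxy’s default check interval (`inter`).

```python
def health_checks_per_second(terminators: int, nodes_per_service: int,
                             services: int, interval_s: float = 2.0) -> float:
    # Every terminator checks every configured node port of every
    # service once per check interval.
    return terminators * nodes_per_service * services / interval_s


# Illustrative numbers only (assumed, not measured).
all_nodes = health_checks_per_second(40, 300, 1)   # every node configured
subset = health_checks_per_second(40, 20, 1)       # 20 nodes per service
```

With 40 terminators and 300 nodes, a single service already draws 6,000 checks per second, and ten such services reach 60,000. Limiting each service to 20 nodes brings the per-service figure down to 400.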

We needed to reduce the number of nodes configured for each service, but we also did not want to send all traffic through a small set of nodes, which could then become a scaling bottleneck. One possible solution would be to pick a number of nodes at random, but then the configuration would change completely every time it was generated, obscuring the real differences.

Instead, we decided to use rendezvous hashing over the service name and the node address to pick a fixed number of nodes per backend. With this method, a different set of nodes is selected for each service, but the selection stays the same as long as the nodes do not change.
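Rendezvous (highest-random-weight) hashing scores every node with a hash of the (service, node) pair and keeps the top k. The sketch below is a generic illustration, not SoundCloud’s code; the choice of SHA-256 and the function names are assumptions. A stable hash matters here because Python’s built-in `hash()` for strings is randomized per process, which would reintroduce the churn that random selection causes.

```python
import hashlib


def _score(service: str, node: str) -> int:
    # Stable hash of the (service, node) pair, so every run of the
    # generator produces the same ranking.
    digest = hashlib.sha256(f"{service}\x00{node}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def select_nodes(service: str, nodes: list[str], k: int) -> list[str]:
    """Pick k nodes for a service via rendezvous hashing."""
    ranked = sorted(nodes, key=lambda n: _score(service, n), reverse=True)
    # Sort the winners so the rendered configuration is stable too.
    return sorted(ranked[:k])
```

A useful property follows from the ranking: removing a node that was not selected changes nothing, and removing a selected node swaps in exactly one replacement while the other k-1 stay put.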

We chose a number of nodes high enough that we didn’t need to worry about one or two nodes going away, or about nodes overlapping between different high-traffic services.

To replace a node, we restart the pipeline that generates and deploys the terminator configuration. This takes several hours, but it is fully automated. Because each service is routed through a limited number of nodes, we have to take care not to take too many nodes out of service at once. This means we can only replace a limited number of Kubernetes nodes per day, but so far this hasn’t been a problem.
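One way to reason about “not too many nodes at once” is to check, before a batch of replacements, that every service keeps enough of its selected nodes. This is a hypothetical sketch: the `min_remaining` threshold and the greedy batching are illustrative assumptions, not a description of SoundCloud’s pipeline.

```python
def safe_to_remove(removed: set[str], selections: dict[str, list[str]],
                   min_remaining: int) -> bool:
    """True if every service keeps at least min_remaining of its nodes."""
    return all(len(set(nodes) - removed) >= min_remaining
               for nodes in selections.values())


def next_batch(candidates: list[str], selections: dict[str, list[str]],
               min_remaining: int) -> set[str]:
    """Greedily grow a batch of nodes that can be replaced together."""
    batch: set[str] = set()
    for node in candidates:
        if safe_to_remove(batch | {node}, selections, min_remaining):
            batch.add(node)
    return batch
```

Here `selections` maps each service to the nodes chosen for it by rendezvous hashing; nodes left out of one batch simply wait for the next run.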

The Node Agent

During short-term maintenance, such as when rebooting nodes for kernel upgrades, we needed the ability to gracefully drain a node. We wrote an agent for the HAProxy agent-check protocol that listens on a fixed port on every node. For the sake of simplicity, we decided to always remove traffic and pods at the same time. Now, when the node is cordoned in Kubernetes, preventing new pods from being scheduled onto it, the agent updates the HAProxy server state to shift traffic away from this node.
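In HAProxy’s agent-check protocol, HAProxy connects to the agent’s TCP port and reads a single newline-terminated line of keywords. A minimal sketch of such a node agent follows, assuming the cordon status is supplied by a callable; the port 9707 and the stub callable are hypothetical (a real agent would read `spec.unschedulable`, which `kubectl cordon` sets, from the Node object).

```python
import socketserver
from typing import Callable


def agent_reply(cordoned: bool) -> bytes:
    # "drain" puts the server into DRAIN mode, so HAProxy sends it no
    # new traffic; "ready" cancels a previous DRAIN or MAINT state.
    return b"drain\n" if cordoned else b"ready\n"


def make_agent(is_cordoned: Callable[[], bool]) -> type:
    class AgentHandler(socketserver.BaseRequestHandler):
        def handle(self) -> None:
            # Answer each agent-check connection with one status line.
            self.request.sendall(agent_reply(is_cordoned()))
    return AgentHandler


if __name__ == "__main__":
    # Hypothetical stub: always report the node as schedulable.
    with socketserver.TCPServer(("0.0.0.0", 9707),
                                make_agent(lambda: False)) as server:
        server.serve_forever()
```

On the terminator side, each server line would then carry the standard options `check agent-check agent-port 9707`, optionally with `agent-inter` to tune how often the agent is polled.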

From time to time, we also need to shift traffic between different deployments of a service on the same cluster, or between clusters. To support this, we extended the agent. By adding annotations to the Kubernetes Service object, we can instruct the agent to open an additional per-service agent-check port that reports not only the node’s maintenance status, but also the desired weight of this service.
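The per-service reply can combine both pieces of information, since the agent-check protocol accepts several keywords on one line, and a percentage such as `50%` scales the server’s configured weight. The annotation keys below are hypothetical, as are the choices to treat a missing weight as 100% and to clamp values to 0 to 100.

```python
WEIGHT_ANNOTATION = "traffic.example.com/weight"          # hypothetical key
AGENT_PORT_ANNOTATION = "traffic.example.com/agent-port"  # hypothetical key


def agent_ports(services: list[dict]) -> dict[int, dict]:
    """Map each requested per-service agent port to its Service object."""
    ports = {}
    for svc in services:
        annotations = svc["metadata"].get("annotations") or {}
        if AGENT_PORT_ANNOTATION in annotations:
            ports[int(annotations[AGENT_PORT_ANNOTATION])] = svc
    return ports


def service_agent_reply(cordoned: bool, annotations: dict[str, str]) -> bytes:
    """Agent-check reply for one service on this node."""
    if cordoned:
        # Node maintenance always wins: stop sending new traffic here.
        return b"drain\n"
    # Missing annotation means full weight; clamp to 0-100 percent.
    weight = max(0, min(100, int(annotations.get(WEIGHT_ANNOTATION, "100"))))
    # "ready" cancels a previous drain; "N%" scales the configured
    # weight, and 0% takes the server out of rotation.
    return f"ready {weight}%\n".encode()
```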

The terminator configuration generator picks up these annotations and configures the agent check accordingly. Application developers can now add multiple Kubernetes backends to any public host and path. For each backend, we select a number of nodes using rendezvous hashing as before and combine the results. By changing the Service annotations, we can then change the weight of each backend within seconds.
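A sketch of how a generator might merge several Kubernetes backends behind one public host and path. It includes a compact rendezvous-hashing helper so it runs on its own. The `Backend` fields, the server naming, and `k` are hypothetical; `agent-check` and `agent-port` are real HAProxy server options. Each server points its agent check at its own service’s agent port, so changing one Service’s weight annotation shifts traffic for that backend only.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    """One Kubernetes backend of a public route (fields hypothetical)."""
    cluster: str
    service: str
    nodes: tuple[str, ...]  # node addresses in this cluster
    node_port: int          # nodePort assigned to the service
    agent_port: int         # per-service agent-check port


def select_nodes(service: str, nodes, k: int) -> list[str]:
    # Rendezvous hashing: rank nodes by a stable hash of (service, node).
    def score(node: str) -> int:
        digest = hashlib.sha256(f"{service}\x00{node}".encode()).digest()
        return int.from_bytes(digest[:8], "big")
    return sorted(sorted(nodes, key=score, reverse=True)[:k])


def combined_backend(name: str, backends: list[Backend], k: int) -> str:
    """Render one HAProxy backend containing servers from every backend."""
    lines = [f"backend {name}"]
    for b in backends:
        for node in select_nodes(b.service, b.nodes, k):
            lines.append(
                f"    server {b.cluster}-{node} {node}:{b.node_port} "
                f"check agent-check agent-port {b.agent_port}")
    return "\n".join(lines)
```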

The Future

We are quite happy with the NodePort-based routing, but there are some caveats.

For one, the load distribution between the instances of an application is not very even, but we compensate by slightly over-provisioning the corresponding apps. At this point, the cost of the increased resource usage is minor compared to the cost of engineering a better load balancing mechanism.

Additionally, operating Kubernetes in physical data centers is a challenge. There are standardized solutions for most use cases on cloud providers, but each data center is slightly different — especially if you are not starting from scratch, but rather integrating Kubernetes into an existing infrastructure.

In this blog post, we described how we solved one particular problem for our particular situation. What’s next? The questions we are thinking about currently revolve around having multiple Kubernetes clusters in different locations, and determining how to route traffic to them in a way that results in each user having the best experience possible.

How have you solved this problem? We would love to hear how you run Kubernetes. Leave a comment below!