Breaking Loose from Third-Party Lock-In with Custom Refactoring Tools

Code refactoring is an essential part of a software developer’s job. Over time, technology evolves, product requirements change, and new features are added to a codebase. All of this can erode both the codebase’s quality and its ability to be extended, and with it the value the code retains over time. Beyond refactoring itself, it’s also part of our job to detect in a timely manner when changes to our code are necessary, so that it doesn’t become too complex or grow to unmanageable levels.

However, even though it’s essential, refactoring can at times be intimidating, and introducing improvements to a codebase without affecting its behavior can pose huge challenges for software developers.

Deprecating Specta

At SoundCloud, we create all our new features in our iOS app using Swift, but since our codebase was started several years ago, we still have a significant amount of Objective-C code to maintain (including third-party libraries). Years ago, before adopting Swift, we decided to use Specta, “A light-weight TDD / BDD framework for Objective-C,” to write our unit tests. It brought us a lot of value for a long time, but around two years ago, after experiencing issues like not being able to run a single test without the entire test suite being run, and being unhappy with Xcode’s support for Specta, we decided to deprecate it.

From the moment of deprecation onward, we decided to write all our new tests using plain XCTests, but we were still locked in to using Specta, as we had more than 900 spec files in our codebase.

The number of specs in our project was far from negligible, and manually transforming them into XCTests was a tedious, error-prone, and time-consuming task. Even though we would have loved to transform all the specs into XCTests at once, doing so by hand would have taken too much time to be viable. Given this interesting challenge, I decided to take advantage of one of the benefits of working at SoundCloud and set out to find a way to automate the transformation of these specs during my self-allocated time (SAT). Luckily, the project succeeded and Specta is no longer part of our codebase, so in this post, I’ll cover how it was done.

Specta vs. XCTest

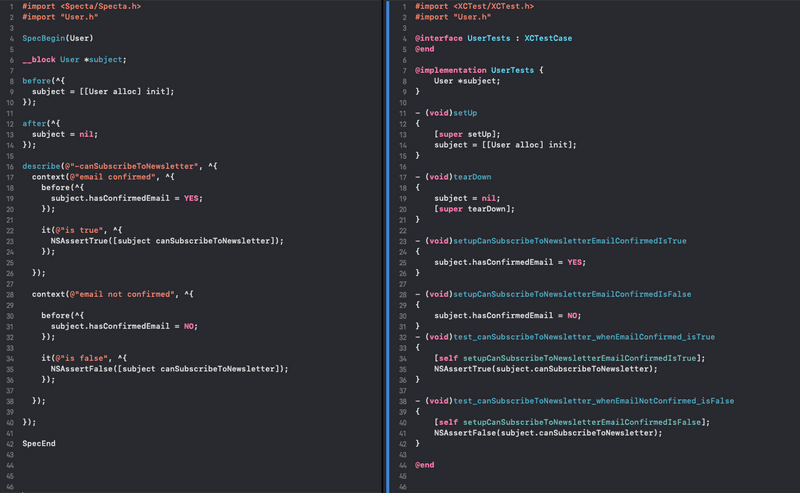

Let’s start by comparing how tests are structured in both Specta and XCTest. Due to Specta’s domain-specific language (DSL), you can structure your tests in complex ways. This can be a blessing if you’re trying to avoid duplication and a curse if it’s taken too far, as it makes specs hard to navigate with all the nesting. For the sake of this blog post, we’ll use a rather simple spec to demonstrate the work done.

The image below shows how testing the same simple User class is done using both formats:

Test Declaration

In Specta, tests are declared with the use of the SpecBegin and SpecEnd macros, while in XCTest, tests are classes that inherit from the XCTestCase class.
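Since an XCTest test is just a class, a generated file can start from a class skeleton. The following is a minimal sketch, not the original tool’s code: makeTestCaseSkeleton is a hypothetical helper, and the layout it emits (ivars in braces right after @implementation) is our own assumption.

```cpp
#include <cassert>
#include <string>

// Hypothetical generator for an XCTestCase subclass. The ivars and methods
// strings are spliced in verbatim; producing them is left to other steps
// (variable declarations, setUp/tearDown, test methods).
std::string makeTestCaseSkeleton(const std::string &className,
                                 const std::string &ivars = "",
                                 const std::string &methods = "") {
  std::string out = "#import <XCTest/XCTest.h>\n\n";
  out += "@interface " + className + " : XCTestCase\n@end\n\n";
  out += "@implementation " + className;
  // Instance variables can be declared in braces right after @implementation.
  out += ivars.empty() ? "\n" : " {\n" + ivars + "}\n";
  out += methods;
  out += "@end\n";
  return out;
}
```

For example, makeTestCaseSkeleton("UserSpec") yields an empty UserSpec class inheriting from XCTestCase, ready to be filled in by later steps.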

Scope of Variables and Properties

In Specta, a variable’s scope is the block in which it’s declared. The Specta DSL works by passing blocks to functions, and every block defines a new context. For variables to be mutable from within blocks nested at a deeper level, they need to be declared with the __block keyword. In XCTest, state that methods need to share is declared as properties or instance variables (ivars), just as in a regular Objective-C class.
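Converting a spec therefore means turning its __block variables into state on the test case. As a minimal sketch, assuming declarations arrive as plain text like the “__block User *subject” entry in the JSON shown later, a hypothetical toInstanceVariable helper could strip the qualifier and terminate the declaration:

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: turns a Specta-style declaration such as
// "__block User *subject" into the ivar declaration "User *subject;".
// Naive by design: it assumes "__block" only appears as the qualifier.
std::string toInstanceVariable(std::string decl) {
  const std::string qualifier = "__block";
  // Remove every occurrence of the __block qualifier.
  for (auto pos = decl.find(qualifier); pos != std::string::npos;
       pos = decl.find(qualifier)) {
    decl.erase(pos, qualifier.size());
  }
  // Trim whitespace and stray ';' from both ends, then terminate once.
  const char *trimmed = " \t\n;";
  const auto first = decl.find_first_not_of(trimmed);
  if (first == std::string::npos) return "";
  const auto last = decl.find_last_not_of(trimmed);
  return decl.substr(first, last - first + 1) + ";";
}
```

This deliberately ignores initializers: a declaration such as __block BOOL flag = NO would become BOOL flag = NO;, which is not a valid ivar declaration, so initial values like that would need to move into setUp.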

Setup and Teardown

When writing tests, we need to prepare the initial state before our tests run and clean it up after they complete. In XCTest, XCTestCase provides class and instance methods (setUp and tearDown) that can be overridden to set up and clean up that state. In Specta, the before and after methods serve the same purpose.
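Translating a top-level before or after block is then mostly a matter of wrapping its body in the matching override. A sketch with hypothetical helper names (makeSetUp, makeTearDown), assuming the body text has already been extracted; calling super follows the common XCTest convention of chaining to the superclass:

```cpp
#include <cassert>
#include <string>

// Hypothetical helpers: wrap the body of a top-level Specta before/after
// block in the corresponding XCTestCase override.
std::string makeSetUp(const std::string &beforeBody) {
  // Let XCTestCase set up first, then prepare our own state.
  return "- (void)setUp {\n  [super setUp];\n" + beforeBody + "}\n";
}

std::string makeTearDown(const std::string &afterBody) {
  // Clean up our own state first, then let XCTestCase tear down.
  return "- (void)tearDown {\n" + afterBody + "  [super tearDown];\n}\n";
}
```

Indentation matters little here if the output is run through clang-format afterward, as the final tool does.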

Test Methods

In XCTest, test methods need to comply with a set of conventions in order for Xcode to find and execute them. As per Apple documentation, “a test method is an instance method on an XCTestCase subclass, with no parameters, no return value, and a name that begins with the lowercase word test.” When using Specta, we call the it function and pass the block that contains the assertions for our test (specs are processed at runtime to dynamically generate test classes that comply with these conventions, which makes it possible for Xcode to execute them).
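The naming half of that convention is easy to check mechanically, which is useful when test names are generated rather than written by hand. The helper below is a hypothetical sketch; it only validates the selector name (it must start with test, contain no colon, and be a valid identifier), since the no-return-value and instance-method rules belong to the generated declaration itself:

```cpp
#include <cassert>
#include <cctype>
#include <string>

// Checks the naming part of XCTest's convention for a selector.
bool isXCTestMethodName(const std::string &name) {
  if (name.rfind("test", 0) != 0) return false;  // must begin with "test"
  for (unsigned char c : name) {
    // Letters, digits and '_' only; this also rejects ':' (parameters)
    // and spaces left over from unprocessed context names.
    if (!std::isalnum(c) && c != '_') return false;
  }
  return true;  // starts with 't', so it cannot start with a digit
}
```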

From Specta to XCTest

Knowing how these two ways of writing tests differ, I figured that to systematically transform a Specta spec into an XCTestCase class, we needed to do the following:

- Find top-level calls to the before and after methods and use them to generate setUp and tearDown methods.

- Find calls to the it method and generate test methods from them.

- Find calls to the before method that are within a context and generate setup methods from them that can be called from test methods to set the initial state as needed.
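Behind these steps is a flattening problem: each it must end up knowing every contextual before that encloses it, from the outermost context inward. A minimal sketch, assuming a simplified in-memory tree (the Context and TestPlan types and collectPlans are hypothetical, not part of the original tool), could look like this:

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical, simplified model of a spec: describe/context blocks nest,
// and each block may carry before bodies and it (test) blocks.
struct Context {
  std::string name;
  std::vector<std::string> befores;  // before bodies declared in this context
  std::vector<std::string> its;      // names of it blocks in this context
  std::vector<Context> children;     // nested describe/context blocks
};

struct TestPlan {
  std::string itName;
  std::vector<std::string> setupBodies;  // outermost context first
};

// Depth-first walk that records, for each it, the contextual before bodies
// that must run before it. `inherited` is taken by value so that sibling
// contexts do not see each other's befores. Spec-level befores are expected
// to live outside this tree, because they become the shared setUp override.
void collectPlans(const Context &ctx, std::vector<std::string> inherited,
                  std::vector<TestPlan> &out) {
  inherited.insert(inherited.end(), ctx.befores.begin(), ctx.befores.end());
  for (const auto &itName : ctx.its) out.push_back({itName, inherited});
  for (const auto &child : ctx.children) collectPlans(child, inherited, out);
}
```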

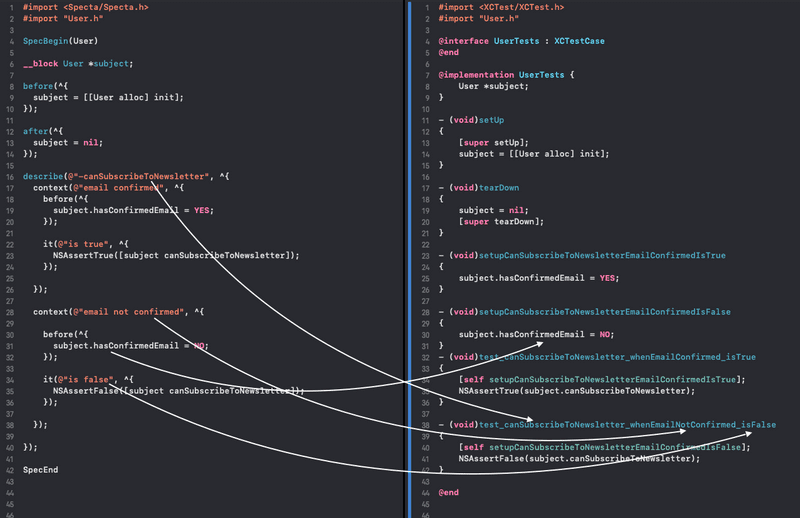

In order to generate intuitive names for our test methods, the context names needed to be extracted from calls to the describe and context functions and then processed to make them valid Objective-C method names. In the same manner, calls to before and after methods from within the associated contexts needed to be detected to make sure the state was being prepared accordingly before the tests were run. If this sounds confusing, the image below might give you a better idea of how elements from the spec were translated into XCTest test methods.
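The name mangling itself can be sketched as a small string function. This is an assumption about how such names could be built, not the original implementation: makeTestMethodName is hypothetical, it treats any non-alphanumeric character as a word break, and it upper-cases the first letter of each word while preserving existing camelCase:

```cpp
#include <cassert>
#include <cctype>
#include <string>
#include <vector>

// Hypothetical helper: joins context and it descriptions into a camelCase
// XCTest method name. Characters that are not letters or digits (spaces,
// '-', ':' ...) only act as word separators and are dropped.
std::string makeTestMethodName(const std::vector<std::string> &phrases) {
  std::string name = "test";
  for (const auto &phrase : phrases) {
    bool startOfWord = true;
    for (unsigned char c : phrase) {
      if (!std::isalnum(c)) {
        startOfWord = true;
        continue;
      }
      name += startOfWord ? static_cast<char>(std::toupper(c))
                          : static_cast<char>(c);
      startOfWord = false;
    }
  }
  return name;
}
```

For the sample spec, the context names -canSubscribeToNewsletter and email confirmed plus the it description is true produce testCanSubscribeToNewsletterEmailConfirmedIsTrue. Two its with identical descriptions in the same context would collide, so a real tool would need some disambiguation.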

Code transformations can be done in multiple ways, and depending on the problem, one tool might be better than another. Some of the options I considered were:

- Text replacement using regular expressions.

- Refactoring features available in Xcode.

- Low-level libraries that operate on the abstract syntax tree (AST).

While possible, using regular expressions to perform the type of source transformations described above would have been incredibly cumbersome and error-prone. The available Xcode features for refactoring were also not advanced enough for the task at hand, so I was left with option three. I started investigating different alternatives that allowed me to operate on the AST. Fortunately, I found LibTooling, which provides a way to conveniently analyze the abstract syntax tree using a set of high-level abstractions to match against sections of the code you’re interested in. In the following sections, I’ll expand on what LibTooling is and how I used it.
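To make the regular-expression problem concrete, here is a small illustration (ours, not from the original project) using std::regex. A naive pattern that captures the body of an it block has no way to balance braces, so it stops at the first closing brace it meets:

```cpp
#include <cassert>
#include <regex>
#include <string>

// Illustration only: tries to capture the body of an it(@"...", ^{ ... })
// block. [^}]* stops at the first '}', so nested braces in the body
// (if statements, inner blocks) silently truncate the capture.
std::string naiveItBody(const std::string &source) {
  static const std::regex pattern(R"re(it\(@"[^"]*",\s*\^\{([^}]*)\})re");
  std::smatch match;
  return std::regex_search(source, match, pattern) ? match[1].str() : "";
}
```

On it(@"is true", ^{ if (x) { check(); } done(); }) the captured body is just " if (x) { check(); ", silently dropping the rest. Regular expressions cannot track arbitrary nesting depth, which is exactly what a parser building an AST handles for free.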

LLVM’s LibTooling

LibTooling is a library you can use to create your own standalone tools based on the Clang compiler. The LLVM compiler takes our code through three different stages to create the executable:

- The frontend stage takes the code through the lexical, syntactic, and semantic analysis phases to generate the IR (intermediate representation) that is used as an input for the subsequent phases.

- The middle-end stage applies target-independent optimizations — like the removal of useless or unreachable code — on the IR.

- Finally, the backend stage converts the IR into machine code to generate the target program.

To perform a source transformation efficiently, we need to operate on the AST, which means tapping into the compiler’s frontend to have it generate the AST for our source so that we can analyze it afterward. Fortunately, this is exactly what LibTooling’s frontend actions do!

ASTMatchFinder

ASTMatchFinder.h declares MatchFinder, a class that LibTooling can wrap in a frontend action (for example, with newFrontendActionFactory) to match against the AST. To better explain what is meant by “matching against the AST,” I’ll provide a concrete example.

Consider the following function:

```cpp
int f(int x) {
  int result = (x / 42);
  return result;
}
```

Now let’s see what its AST looks like:

```
`-FunctionDecl 0x5aeab50 <test.cc:1:1, line:4:1> f 'int (int)'
  |-ParmVarDecl 0x5aeaa90 <line:1:7, col:11> x 'int'
  `-CompoundStmt 0x5aead88 <col:14, line:4:1>
    |-DeclStmt 0x5aead10 <line:2:3, col:24>
    | `-VarDecl 0x5aeac10 <col:3, col:23> result 'int'
    |   `-ParenExpr 0x5aeacf0 <col:16, col:23> 'int'
    |     `-BinaryOperator 0x5aeacc8 <col:17, col:21> 'int' '/'
    |       |-ImplicitCastExpr 0x5aeacb0 <col:17> 'int' <LValueToRValue>
    |       | `-DeclRefExpr 0x5aeac68 <col:17> 'int' lvalue ParmVar 0x5aeaa90 'x' 'int'
    |       `-IntegerLiteral 0x5aeac90 <col:21> 'int' 42
    `-ReturnStmt 0x5aead68 <line:3:3, col:10>
      `-ImplicitCastExpr 0x5aead50 <col:10> 'int' <LValueToRValue>
        `-DeclRefExpr 0x5aead28 <col:10> 'int' lvalue Var 0x5aeac10 'result' 'int'
```

The dump shows which kinds of nodes are generated when compiling the function above. With MatchFinder, you can register AST matchers that selectively match nodes of this AST. Some of the matchers we can use are callExpr, declStmt, functionDecl, and compoundStmt. For more matchers, have a look at the AST Matcher Reference.
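The idea behind matching can be illustrated without Clang at all. The toy below is not Clang’s API: Node and findAll are hypothetical, and they operate on a hand-built, trimmed-down version of the tree above. The function walks the tree in pre-order and collects the nodes a predicate accepts, much as a declRefExpr() matcher would report both variable references in f:

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// A toy AST node: a kind (as printed in Clang's AST dump), an optional
// name, and child nodes. This only illustrates the idea of matching.
struct Node {
  std::string kind;
  std::string name;
  std::vector<Node> children;
};

// Pre-order traversal that collects every node accepted by the predicate.
void findAll(const Node &node, const std::function<bool(const Node &)> &pred,
             std::vector<const Node *> &matches) {
  if (pred(node)) matches.push_back(&node);
  for (const auto &child : node.children) findAll(child, pred, matches);
}
```

Applied to f, it reports the DeclRefExpr for x and then the one for result. Clang’s matchers layer a composable DSL, type information, and source locations on top of this kind of traversal.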

Refactoring with ASTMatchers

As described in the section above, ASTMatchers help us easily identify the presence of specific nodes in our source code. Once we’ve identified the nodes, we can also find out their specific locations. This allows us to analyze our spec source code and extract the sections we’re interested in. Once extracted, we can use the output to build a new source file, in this case our XCTestCase class. Let’s look at some of the ASTMatchers I created for matching against parts of a Specta spec. The matcher for calls to the it function looks as follows:

```cpp
#include "clang/ASTMatchers/ASTMatchers.h"
using namespace clang::ast_matchers;

// Matches it(@"name", ^{ ... }) and binds the name, the block and the call.
const auto ItExprMatcher = callExpr(callee(functionDecl(hasName("it"))),
    hasArgument(0, hasDescendant(stringLiteral().bind("testName"))),
    hasArgument(1, blockExpr().bind("block"))
).bind("itExpr");
```

We can see how the DSL lets us express, in a very natural way, that we want to find a call expression whose callee is a function named it. The call should have two arguments: the first containing a string literal, and the second being a block expression. Also, notice the calls to the bind function; as we’ll see in a moment, calling it allows us to refer to specific nodes via the names passed to it. Let’s look at a more complex example to better illustrate what ASTMatchers can do:

```cpp
// Matches before(^{ ... }) calls that are NOT nested inside a describe or
// context call, i.e. the top-level ones.
const auto TopLevelBeforeExpr = callExpr(
    callee(functionDecl(hasName("before"))),
    hasArgument(0, blockExpr().bind("beforeExpr")),
    unless(hasAncestor(callExpr(callee(functionDecl(anyOf(hasName("describe"), hasName("context")))))))
).bind("topLevelBeforeExpr");
```

As mentioned before, we need to distinguish between calls to before that are within a context and top-level ones. This is necessary to produce setup methods that set the initial state each test expects. To identify top-level before calls, we add a condition to our matcher: it accepts calls to functions named “before” that take a block expression as the first argument, unless they have an ancestor that is a call to describe or context.
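In terms of semantics, unless(hasAncestor(...)) means “reject this node if any node above it matches.” A toy re-creation of that rule on a simplified call tree might look like the following; Call and findTopLevelBefores are hypothetical, and the IDs are borrowed from the sample JSON later in this post:

```cpp
#include <cassert>
#include <string>
#include <vector>

// Toy model of the calls in a spec: each call may contain nested calls
// made from within its block argument. Not Clang's API, just the idea.
struct Call {
  std::string callee;
  std::string id;
  std::vector<Call> nested;
};

// Collects the ids of before calls that have no describe/context ancestor,
// mirroring unless(hasAncestor(<describe or context call>)).
void findTopLevelBefores(const Call &call, bool insideContext,
                         std::vector<std::string> &ids) {
  if (call.callee == "before" && !insideContext) ids.push_back(call.id);
  const bool opensContext =
      call.callee == "describe" || call.callee == "context";
  for (const auto &child : call.nested)
    findTopLevelBefores(child, insideContext || opensContext, ids);
}
```

For the User spec, only the before with ID 266:310 qualifies; the two befores nested inside contexts are excluded, which matches the befores without a parentId in the JSON.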

Handling Matches

Every time we register a matcher in our MatchFinder, we also need to pass an object that implements the MatchCallback abstract class. This class declares the following methods:

```cpp
// Simplified excerpt of MatchFinder::MatchCallback from
// clang/ASTMatchers/ASTMatchFinder.h; the real class declares a few more members.
class MatchCallback {
public:
  virtual ~MatchCallback();
  virtual void run(const MatchResult &Result) = 0;
  virtual void onStartOfTranslationUnit() {}
  virtual void onEndOfTranslationUnit() {}
};
```

As their names suggest, the onStartOfTranslationUnit and onEndOfTranslationUnit methods are called at the start and end of processing a translation unit (source file). More importantly, the run method is called whenever a match is detected. To know which kind of node a result contains, we can check it for the names we passed to the bind function, using the expected node type, like this:

```cpp
void ASTHandler::run(const MatchFinder::MatchResult &Result) {
  // getNodeAs returns nullptr unless an Expr is bound to "itExpr".
  if (Result.Nodes.getNodeAs<clang::Expr>("itExpr")) {
    // ... handle the matched it(...) call ...
  }
}
```

Here we’re checking whether the result contains a node of type Expr bound to the itExpr identifier. Once we’ve identified the kind of result, we can get more details from it. For instance, when we match a call to the it function, we want to extract its first argument so we can use it to name the generated XCTestCase method. We can extract this string as follows:

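```cpp
if (Result.Nodes.getNodeAs<clang::Expr>("itExpr")) {
  // "testName" is bound to the string literal in the first argument of it().
  if (const auto *literal = Result.Nodes.getNodeAs<clang::StringLiteral>("testName")) {
    const std::string itText = literal->getString().str();
  }
}
```

Unique Identifiers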

In order to identify sibling nodes after extracting the code from the AST, I needed to mark nodes in a way that allowed me to identify the ones that shared the same parent. For this, I created a helper function that generates a unique ID based on the beginning and end location of a node:

```cpp
// Builds an ID such as "389:579" from the file offsets where the node's
// first and last tokens start.
std::string Utils::getIdForNode(const std::string &key,
                                const MatchFinder::MatchResult &Result) {
  const auto *node = Result.Nodes.getNodeAs<clang::Stmt>(key);
  if (!node) return "";  // no Stmt is bound to this key
  const auto &SM = *Result.SourceManager;
  return std::to_string(SM.getFileOffset(node->getBeginLoc())) + ":" +
         std::to_string(SM.getFileOffset(node->getEndLoc()));
}
```

Matching Preprocessors

In addition to matching nodes in the AST, I also needed to handle preprocessor directives in order to find the inclusion directives that had to be copied over to the XCTest output file. Clang provides the PreprocessOnlyAction class, a frontend action that only runs the preprocessor; by registering preprocessor callbacks (PPCallbacks), we can be notified about directives such as inclusions and pragmas, among others.
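Deciding which of the collected directives to keep is then plain string work. The sketch below rests on our own assumptions, not on the original tool: includesForXCTest is a hypothetical helper that drops any directive mentioning Specta (the converted file no longer needs it) and makes sure XCTest is imported first:

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical helper: given the include/import directives found in a spec,
// returns the ones to write into the XCTest file. Assumption: anything
// mentioning Specta is dropped, and XCTest is always imported first.
std::vector<std::string> includesForXCTest(
    const std::vector<std::string> &specIncludes) {
  std::vector<std::string> out{"#import <XCTest/XCTest.h>"};
  for (const auto &directive : specIncludes) {
    if (directive.find("Specta") != std::string::npos) continue;
    if (directive == out.front()) continue;  // already imported
    out.push_back(directive);
  }
  return out;
}
```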

Collecting Results

To keep things simple given my limited C++ experience, I decided to serialize the match results to JSON and do the rest of the work of generating the XCTest source with a different set of tools. The example below shows the resulting JSON for the User sample spec presented earlier:

Sample JSON result:

```json
{
  "afters": [
    {
      "body": "\n subject = nil;\n",
      "id": "314:341"
    }
  ],
  "befores": [
    {
      "body": "\n subject = [[User alloc] init];\n",
      "id": "266:310"
    },
    {
      "body": "\n subject.hasConfirmedEmail = NO;\n ",
      "id": "424:477",
      "parentId": "389:579"
    },
    {
      "body": "\n subject.hasConfirmedEmail = YES;\n ",
      "id": "629:683",
      "parentId": "585:787"
    }
  ],
  "contexts": [
    {
      "id": "389:579",
      "isSharedExample": false,
      "name": "email confirmed",
      "parentId": "345:794"
    },
    {
      "id": "585:787",
      "isSharedExample": false,
      "name": "email not confirmed",
      "parentId": "345:794"
    },
    {
      "id": "345:794",
      "isSharedExample": false,
      "name": "-canSubscribeToNewsletter"
    }
  ],
  "includes": [],
  "its": [
    {
      "body": "\n NSAssertTrue([subject canSubscribeToNewsletter]);\n ",
      "id": "489:568",
      "name": "is true",
      "parentId": "389:579"
    },
    {
      "body": "\n NSAssertFalse([subject canSubscribeToNewsletter]);\n ",
      "id": "695:776",
      "name": "is false",
      "parentId": "585:787"
    }
  ],
  "spec-name": "UserSpec",
  "vars": [
    {
      "body": "__block User *subject",
      "id": "0:263"
    }
  ]
}
```

Interpreting JSON Results

To correctly generate an XCTest test from a spec, we needed to perform multiple tasks on matched nodes in order to get valid Objective-C code that Xcode would be able to execute. For the sake of convenience, I decided to do the rest of the work in Ruby, given the vast number of gems — like Thor and activesupport — available to create command-line tools and easily manipulate strings.
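The parentId fields are what allow the flat JSON arrays to be turned back into a tree. The original tool did this part in Ruby; to keep this post’s examples in a single language, here is a hedged C++ sketch (ContextEntry and contextPath are hypothetical) that follows the parent chain of an entry and returns its context names, outermost first:

```cpp
#include <algorithm>
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical mirror of one entry in the "contexts" array.
struct ContextEntry {
  std::string id;
  std::string name;
  std::string parentId;  // empty for an outermost describe
};

// Follows parentId links upward, starting from the parentId of an "its"
// (or "befores") entry, and returns context names outermost to innermost.
std::vector<std::string> contextPath(const std::string &parentId,
                                     const std::vector<ContextEntry> &contexts) {
  std::map<std::string, const ContextEntry *> byId;
  for (const auto &context : contexts) byId[context.id] = &context;

  std::vector<std::string> names;
  // The size check guards against malformed input with a parentId cycle.
  for (auto entry = byId.find(parentId);
       entry != byId.end() && names.size() < contexts.size();
       entry = byId.find(entry->second->parentId)) {
    names.push_back(entry->second->name);
  }
  std::reverse(names.begin(), names.end());
  return names;
}
```

For the it named is true, whose parentId is 389:579, this yields -canSubscribeToNewsletter followed by email confirmed, which is the input a test-name generator needs.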

Generating an XCTestCase Class

Going over every task involved in the transformation process, whose main purpose is to replicate a spec’s flow of control, would make for an excessively long post. So instead, here’s a list of the most relevant tasks I had to perform on the resulting JSON file to generate the XCTestCase class:

- Generate the XCTestCase skeleton class.

- Copy over the inclusion directives.

- Copy the variable declarations and handle potential duplicates coming from different contexts of the same spec.

- Generate a main setup method that overrides XCTestCase’s setUp method, along with other setup methods specific to each test, from the elements inside befores in the JSON file.

- Generate a teardown method from the spec’s after calls.

- Generate test methods with names computed according to the context they’re in, and insert calls to the corresponding setup methods to configure the state before running the validations.

- Format the output using clang-format.
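The duplicate-variable step from the list above can be sketched as keeping the first declaration of each name. This is an illustration under our own assumptions (declarations already normalized to forms like User *subject;, with no initializers), written in C++ rather than the Ruby the tool used; uniqueDeclarations and declaredName are hypothetical:

```cpp
#include <cassert>
#include <cctype>
#include <set>
#include <string>
#include <vector>

// Extracts the variable name from a declaration such as "User *subject;":
// the last run of identifier characters. Naive by design.
std::string declaredName(const std::string &decl) {
  auto isIdent = [](unsigned char c) { return std::isalnum(c) || c == '_'; };
  std::string::size_type end = decl.size();
  while (end > 0 && !isIdent(decl[end - 1])) --end;
  std::string::size_type begin = end;
  while (begin > 0 && isIdent(decl[begin - 1])) --begin;
  return decl.substr(begin, end - begin);
}

// Keeps the first declaration of each variable name, preserving order, so a
// variable declared in several contexts becomes a single ivar.
std::vector<std::string> uniqueDeclarations(
    const std::vector<std::string> &decls) {
  std::set<std::string> seen;
  std::vector<std::string> out;
  for (const auto &decl : decls) {
    if (seen.insert(declaredName(decl)).second) out.push_back(decl);
  }
  return out;
}
```

Two declarations of the same name with different types would silently keep the first one here; flagging such conflicts for manual review is probably the safer choice.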

To take care of these requirements, I created multiple parsers for processing the JSON and outputting different parts of the XCTest test. The resulting tool is a Ruby gem that:

- Executes the LibTooling-based parser that generates the JSON file.

- Calls all the different parsers to generate the XCTestCase class and merges their results together.

- Formats the resulting XCTestCase class using clang-format.

Results

Once the tool was created, transforming a spec into XCTest went from taking hours to taking only a couple of minutes. This allowed us to transform our 900+ specs with confidence and ease. The last spec was recently transformed and we were consequently able to remove Specta from our codebase and free ourselves from this third-party coupling, making the project a total success! 🎉

But as is the case with everything, it wasn’t all perfect. So here are some points to keep in mind if you are considering building a refactoring tool with LibTooling:

- Poor documentation: the documentation on how to create these tools is limited, and on top of that, much of what is available is outdated, since the C++ API offers no stability guarantees.

- Edge cases: even after creating the ASTMatchers to match the specs, we had to go back and expand them to support edge cases we hadn’t detected before. This is natural when refactoring so many different sources, especially when they’re written in a language that allows equivalent expressions to be written in several different ways.

- Missing matchers: despite the large number of matchers available, sometimes you might want to match something no existing matcher covers, forcing you to write your own custom matcher.

- Working in C++: this depends on how comfortable you are reading and writing C++, but if, like me, you’re used to working with higher-level languages, using C++ to write a refactoring tool might feel like adding complexity to a task that can, by itself, be quite daunting.

Conclusion

Creating your own refactoring tools requires an upfront investment of work, and whether that investment is justified depends on the case. In the right situations, building a refactoring tool tailored to your problem can prove to be a high-return investment. In our case, we went from needing hours of tedious work to transform a spec to being able to just run a command and get the output instantly! This kind of automation frees up resources for other tasks, like building new features, which is a huge benefit for companies dealing with large amounts of legacy code.