Hands-Off Deployment with Canary

At SoundCloud, we follow continuous delivery best practices, i.e. deploying small, incremental changes often (many times a day). To do this confidently and reliably, we verify these changes before releasing them, using measures that include unit, integration, and contract tests.

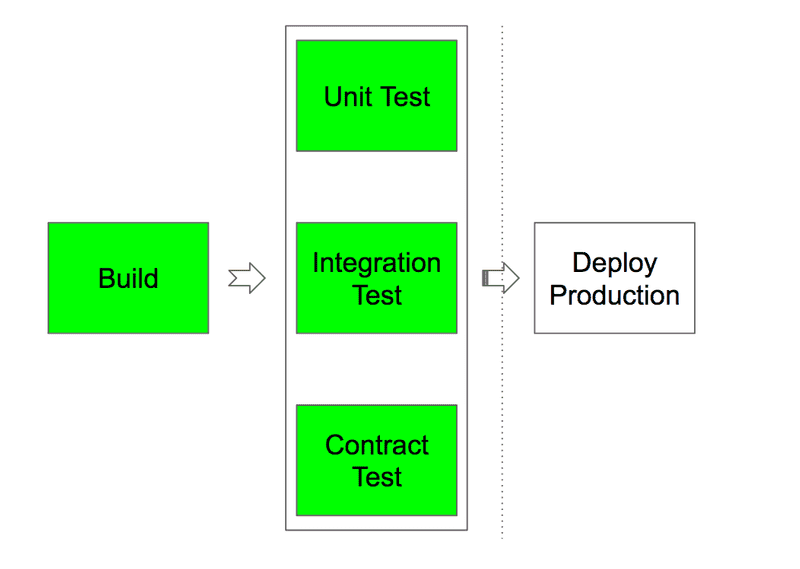

This process is automated as part of a continuous delivery (CD) pipeline. Historically, a typical CD pipeline here has contained at least the following steps:

After building and packaging the code, a battery of automated tests was run in order to verify the expected behavior. Once all the tests passed, deployment to production could be manually triggered.

This automated process, together with code reviews and other internal measures, greatly reduced the risk of introducing regressions.

However, even with these precautions in place, things can (and eventually will) go wrong. To detect production issues, we use monitoring and alerting, so that when a problem is found in production, we can react by reverting to a known-good previous state. Even so, because the change has already been fully rolled out by that point, the user experience may already have been severely impacted.

In order to improve the user experience, we have been exploring different ways of reducing the impact and the Mean Time to Recovery (MTTR) of faulty deployments. Enter canary releases…

Iteration 1: Manual Canary Release

The term “canary” comes from an early 20th-century mining tradition in which canaries were brought down into coal mines to detect toxic gases. When a canary died, it alerted workers to carbon monoxide or other poisons in the air, allowing them to evacuate before they were affected.

In the context of software development, a canary release makes release candidates available to a small subset of users in order to detect regressions before the changes reach all users.

At SoundCloud, we initially implemented this technique by building tooling that packaged and published release candidates into a canary environment and by configuring load balancers to divert a small amount of production traffic to it. By comparing metrics between the production and canary environments, our developers were able to identify faulty release candidates before they made it to production.
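To make the idea concrete, here is a minimal sketch of the two ingredients described above: sending a small fraction of requests to the canary and comparing a metric between the two environments. It is not SoundCloud's tooling. The names `EnvStats`, `pick_environment`, and `canary_is_worse`, the use of error rate as the metric, and all thresholds are assumptions chosen for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class EnvStats:
    """Request counters for one environment (hypothetical structure)."""
    requests: int = 0
    errors: int = 0

    @property
    def error_rate(self) -> float:
        # Avoid dividing by zero before any traffic has been seen.
        return self.errors / self.requests if self.requests else 0.0


def pick_environment(canary_weight: float, rng: random.Random) -> str:
    """Route one request: 'canary' with probability canary_weight."""
    return "canary" if rng.random() < canary_weight else "production"


def canary_is_worse(production: EnvStats, canary: EnvStats,
                    max_ratio: float = 2.0, min_requests: int = 100) -> bool:
    """Return True when the canary error rate clearly exceeds production.

    max_ratio and min_requests are illustrative assumptions, not values
    taken from the article.
    """
    if canary.requests < min_requests:
        return False  # too little canary traffic to draw a conclusion
    # The absolute floor keeps a zero production error rate from turning
    # every single canary error into a reported regression.
    allowed = max(production.error_rate * max_ratio, 0.001)
    return canary.error_rate > allowed
```

In the manual setup, a developer performed this comparison by eye on dashboards; the function only shows what such a comparison boils down to.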

This was very convenient, but it came with some disadvantages. As it was a manual process and not integrated into the CD pipelines, it was easy for developers to forget to turn off old release candidates. Multiple versions receiving production traffic sometimes caused confusion and hard-to-debug issues. And although the process was documented, not everyone in the company was aware of the possibility of using canary environments. Because the process was tedious and manual, it was only used for a very small number of releases. Finally, both the lack of traceability and the ability of any developer to put arbitrary code into production without following standard quality checks raised security and safety concerns.

Iteration 2: Canary Pipeline Integration

A natural next step was to integrate canaries into our deployment pipeline, thereby automating the process and ensuring it was used for every release. This also ensured that any code receiving production traffic had passed our standard quality processes and that the release candidate running in the canary environment was up to date.

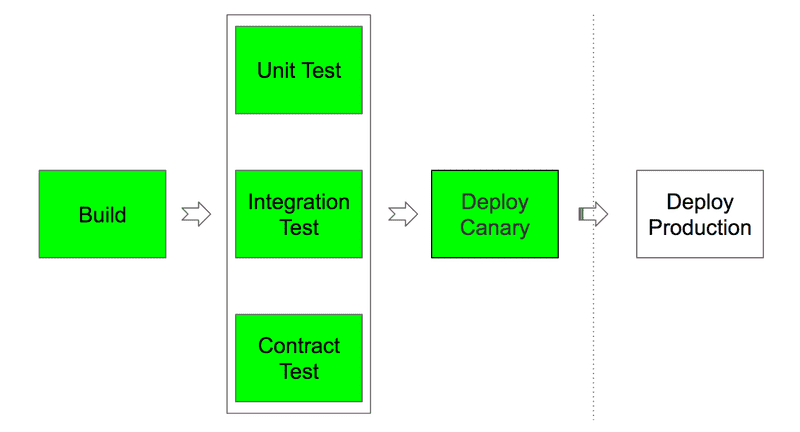

With these changes in effect, the pipeline looked like this:

Every change went through a canary step in which developers could manually compare metrics and identify issues before proceeding to a full production deploy.

This was a clear improvement over the previous situation, but it was still a manual process that relied on developers carefully looking at the relevant graphs and spotting potential problems, which was time-consuming and error-prone.

Iteration 3: Automated Canary Verification

In order to fully automate our deployments, we had to solve the problem of automatic canary verification. We soon realized we could leverage our existing monitoring and alerting infrastructure to accomplish just that.

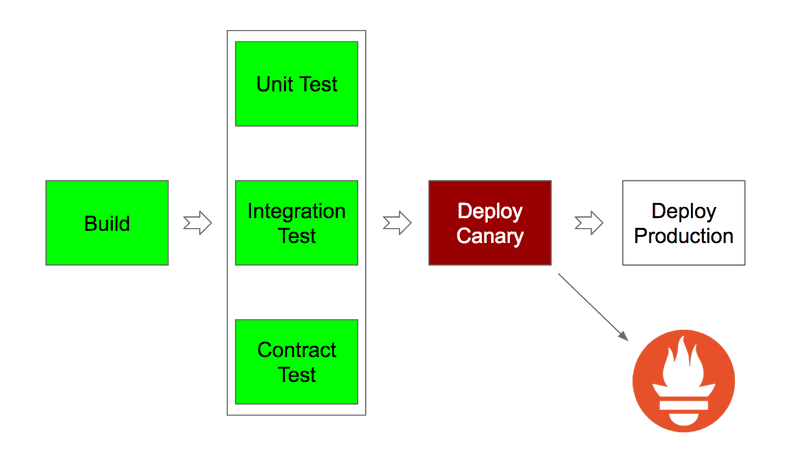

Every service at SoundCloud has a set of defined rules used to detect anomalies and undesired behaviors. With small modifications to the rule expressions, we were able to detect these conditions in each of our defined environments.
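As an illustration of what such a "small modification" might look like, the sketch below turns a single rule expression into a template with the environment as a parameter. The expression is written in a PromQL-like syntax purely for illustration; the article does not state which monitoring system or rule language is used, and the metric name, the `env` label, the service name, and the threshold are all hypothetical.

```python
from string import Template

# Hypothetical error-ratio rule. The syntax, metric name, and labels are
# assumptions; only the idea of parameterizing by environment is the point.
RULE_TEMPLATE = Template(
    'sum(rate(http_requests_total{service="$service", env="$env", status=~"5.."}[5m]))'
    ' / sum(rate(http_requests_total{service="$service", env="$env"}[5m]))'
    ' > $threshold'
)


def rule_for(service: str, env: str, threshold: float = 0.01) -> str:
    """Render the same rule for a given service and environment."""
    return RULE_TEMPLATE.substitute(service=service, env=env, threshold=threshold)


# The same definition now covers both environments.
production_rule = rule_for("example-service", "production")
canary_rule = rule_for("example-service", "canary")
```

Keeping one definition per rule means the canary is judged by the same conditions that already guard production, rather than by a separate set of checks that could drift over time.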

The missing piece was to query our monitoring system from the CD pipelines to automatically detect and roll back any change introducing regressions. This allowed us to remove manual intervention while ensuring the same standards of quality used for our production environments.
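A pipeline step of this kind can be sketched as a simple polling loop: ask the monitoring system whether any rule is firing for the canary, roll back on the first hit, and promote once the observation window passes cleanly. This is a minimal sketch under stated assumptions, not the actual pipeline. The callables `firing_alerts`, `promote`, and `roll_back` are hypothetical hooks standing in for the monitoring query and the deployment tooling, and the number of checks and the interval are illustrative.

```python
import time
from typing import Callable, Sequence


def verify_canary(
    firing_alerts: Callable[[], Sequence[str]],
    promote: Callable[[], None],
    roll_back: Callable[[], None],
    checks: int = 10,
    interval_seconds: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Watch the canary for a fixed window, then promote or roll back.

    firing_alerts is a hypothetical hook that returns the names of rules
    currently firing for the canary environment. Returns True when the
    release candidate was promoted and False when it was rolled back.
    """
    for _ in range(checks):
        alerts = firing_alerts()
        if alerts:
            # Any firing rule is treated as a regression: stop immediately.
            roll_back()
            return False
        sleep(interval_seconds)
    # The whole observation window passed without a firing rule.
    promote()
    return True
```

Passing the hooks in as arguments keeps the loop independent of any particular monitoring or deployment system and makes it easy to exercise with fakes.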

The resulting pipeline looked like this:

Conclusion

The addition of automatic canary deployments and verifications at SoundCloud was a massive success and has greatly improved our productivity and reliability. The confidence we have in automatically detecting any regression has removed the manual toil around deployment and enables our developers to focus on adding value without spending time babysitting deployments. When things inevitably go wrong, we now have yet another safety net that protects us from large-scale outages. Using automatic processes and removing human interaction to verify and roll back faulty deployments has greatly reduced our Mean Time to Detect (MTTD) and Mean Time to Recovery (MTTR).